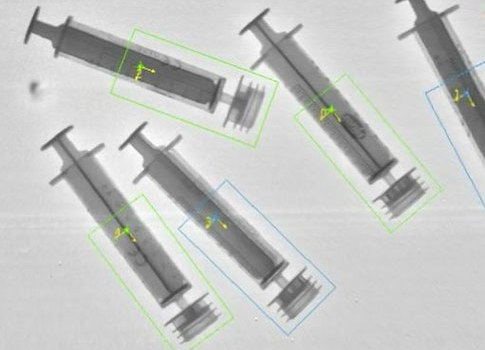

Visual servo control: Vision used for robotic or machine guidance can also be used for in-line part inspection to enhance product quality, working alongside or in place of traditional feedback systems. See photos, video.

Robotics and automated machines can use visual servo control for robotic guidance, enhancing motion control and improving product quality, while replacing traditional feedback systems.

Every year robots become more integrated into our everyday lives and less like characters familiar only from science fiction movies. The field of robotics is advancing in the manufacturing and consumer industries, but one of the main obstacles stalling significant growth in this space is that most robots are “blind,” or unable to perceive the world around them. With little to no perception of their environment, they are unable to react to changing surroundings. Our eyes and brain act as built-in hardware and software that help us perceive the world around us, including the various depths, textures, and colors encountered during the day. Just as our eyes receive signals from our brain to continuously focus and adjust to light as we move around, the integration of vision technology with today’s software tools makes it possible for robots to see and react to their changing environment. This capability opens the door to a wide range of new vision and robotics applications.

Vision-guided robotics

One of the most common uses for vision technology in robotics is demonstrated in vision-guided robotics. This has historically been applied on the factory production floor in areas such as assembly and materials handling, where a camera is used to acquire an image and locate a part or destination before sending coordinates to the robot to perform a specific function like picking up a part, as shown in the photos and short video clip.
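As a rough illustration of the "locate a part, then send coordinates" step, the sketch below converts a pixel location into robot coordinates. It assumes a calibrated camera looking straight down at a flat work surface, with image axes aligned to the robot's X and Y axes. The function name, the scale factor, and the reference points are hypothetical values chosen for the example; a real cell would get them from a calibration procedure.

```python
def pixel_to_robot(u, v, mm_per_pixel, origin_px, origin_robot_mm):
    """Map an image pixel (u, v) to robot XY coordinates in millimeters.

    Assumptions (not from the article): no rotation between camera and
    robot axes, no lens distortion, and a single flat working plane.
    """
    x = origin_robot_mm[0] + (u - origin_px[0]) * mm_per_pixel
    y = origin_robot_mm[1] + (v - origin_px[1]) * mm_per_pixel
    return x, y


# Hypothetical calibration: pixel (320, 240) corresponds to robot (100 mm, 50 mm),
# and each pixel spans 0.5 mm on the work surface.
part_xy = pixel_to_robot(420, 260, mm_per_pixel=0.5,
                         origin_px=(320, 240),
                         origin_robot_mm=(100.0, 50.0))
print(part_xy)  # (150.0, 60.0)
```

Any error in the scale factor or in the robot's motion goes uncorrected in this scheme, because the image is used only once.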

Integrating vision technology in applications such as these makes it possible for machines to become increasingly intelligent and therefore more flexible. The same machine can perform a variety of tasks because it can recognize which part it is working on and adapt appropriately based on different situations. The additional benefit of using vision for machine guidance is that the same images can be used for in-line inspection of the parts that are being handled, so not only are robots made more flexible but they also can produce results of higher quality.

Vision guidance can become expensive, however, when the task demands a high degree of accuracy from components such as the camera or motion system. Some vision-guided robotics systems acquire a single image at the beginning of the task and have no feedback to account for small errors that arise later.

Continuous feedback

A technique known as visual servo control addresses this challenge. A camera is mounted either on or near the robot and provides continuous visual feedback to correct for small errors in the movements. As a result, image processing is used within the control loop, and in some cases the image information can completely replace traditional feedback mechanisms like encoders by performing direct servo control tasks.
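The difference between a single-image move and continuous visual feedback can be sketched with a small simulation. Everything here is an assumption made for illustration: the robot is modeled as a one-axis system that achieves only 90% of each commanded move, the "camera" reports position error directly, and the controller is a plain proportional loop. Real visual servo systems work on image features and involve more elaborate control laws.

```python
def simulate_move(position, command, actuator_gain=0.9):
    """Hypothetical plant: the robot achieves only 90% of each commanded move."""
    return position + actuator_gain * command


def look_then_move(start, target):
    """One image at the start of the task, one move, no feedback."""
    return simulate_move(start, target - start)


def visual_servo(start, target, gain=0.5, tolerance=0.01, max_steps=100):
    """Re-measure the error every cycle and command a proportional correction."""
    position = start
    for step in range(max_steps):
        error = target - position  # stands in for the error measured in a new image
        if abs(error) < tolerance:
            return position, step
        position = simulate_move(position, gain * error)
    return position, max_steps


open_loop_final = look_then_move(0.0, 100.0)
servo_final, steps = visual_servo(0.0, 100.0)
print(f"single image: residual error {100.0 - open_loop_final:.2f}")
print(f"visual servo: residual error {100.0 - servo_final:.4f} after {steps} cycles")
```

In this toy model the single-image move stops about 10 units short of the target, while the feedback loop keeps shrinking the error until it falls below the tolerance, even though the actuator is equally imperfect in both cases.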

Direct servo control benefits applications such as laser alignment and semiconductor manufacturing, among other processes that involve high-speed control.

While the use of vision in robotics is common in industrial applications, it is becoming increasingly prevalent in the embedded industry. An example of this advancement is found in the field of mobile robotics. Robots are migrating from the factory floor and taking on a more prominent role in our daily lives. Functions range from service robots wandering the halls of local hospitals, helping to offset healthcare cost pressures and a shortage of doctors and nurses, to autonomous tractors plowing fields to improve planting and harvesting efficiency.

Autonomous robotic boom

Nearly every autonomous mobile robot requires sophisticated imaging capabilities, from obstacle avoidance to visual simultaneous localization and mapping. In the next decade, the number of vision systems used by autonomous robots is expected to eclipse the number of systems used by fixed-base robot arms.

A growing trend is the adoption of 3D vision technology, which can help robots perceive even more about their environment. From its roots in academic research labs, 3D imaging technology has made great strides as a result of advances in sensors, lighting, and, most importantly, embedded processing. Today 3D vision is emerging in a variety of applications, from vision-guided robotic bin picking to high-precision metrology and mobile robotics. The latest generation of processors can handle the immense data sets and sophisticated algorithms required to extract depth information and make decisions quickly.

Robotic stereo vision

Mobile robots use depth information to measure the size and distance of obstacles for accurate path planning and obstacle avoidance. Stereo vision systems can provide a rich set of 3D information for navigation applications and perform well even in changing light conditions. Stereo vision is the practice of using two or more cameras, offset from one another, that look at the same object. Comparing the images reveals the disparity, the shift in an object's apparent position from one camera to the other, from which accurate depth information can be calculated.
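For the common case of two identical, parallel cameras with rectified images, depth follows from disparity through the standard relation Z = f × B / d, where f is the focal length in pixels, B is the baseline (the distance between the cameras), and d is the disparity in pixels. The sketch below applies that relation; the function name and the numeric values are illustrative assumptions, not figures from any particular system.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Return depth in meters for a rectified, parallel stereo pair.

    Uses Z = f * B / d. A disparity of zero or less means the point could
    not be matched or is effectively at infinity, so it is rejected.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700-pixel focal length, cameras 0.12 m apart.
near = depth_from_disparity(84.0, focal_length_px=700.0, baseline_m=0.12)
far = depth_from_disparity(42.0, focal_length_px=700.0, baseline_m=0.12)
print(f"84 px disparity: about {near:.2f} m")
print(f"42 px disparity: about {far:.2f} m")
```

Halving the disparity doubles the computed depth, which is why distant obstacles, with their small disparities, are measured less precisely than nearby ones.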

While the increased performance of embedded processors has enabled algorithms for uses such as 3D vision in robotics, a range of applications that require additional performance remains untapped. For example, in the medical industry, robotic surgery and laser control systems are becoming tightly integrated with image guidance technology. In these types of high-performance vision applications, field programmable gate arrays (FPGAs) can handle the image preprocessing or use the image information as feedback in a high-speed control application.

FPGAs are well suited to highly deterministic and parallel image processing algorithms and to tightly synchronizing the processing results with a motion or robotic system. This technology is put into practice, for example, during laser eye surgeries, where slight movements of the patient’s eyes are detected by the camera and used as feedback to auto-focus the system at a high rate. Additionally, FPGAs can support applications such as surveillance and automotive by performing high-speed feature tracking and particle analysis.

Integrated technologies

Due to rapidly advancing technologies in processing, software, and imaging hardware, cameras are everywhere. Machines and robots in the industrial and consumer industries are becoming increasingly intelligent with the integration of vision technology. The accelerated adoption of vision capabilities into numerous devices also means that many system designers are working with image processing and embedded vision technologies for the first time, which can be a daunting task.

Valuable resources are available to system designers and others simply interested in vision technology. One such resource is the Embedded Vision Alliance (EVA), a partnership of leading technology suppliers with expertise in embedded vision technology. The EVA helps system designers incorporate embedded vision technology into their designs through a collection of complimentary resources, including technical articles, discussion forums, online seminars, and face-to-face events.

– Carlton Heard is National Instruments vision hardware and software product manager. Edited by Mark T. Hoske, content manager, CFE Media, Control Engineering.

Online

www.embedded-vision.com (EVA)