Some pioneering oil and gas producers find it easier to extract value from operational data on platforms that mimic data warehouses.

There is no longer an argument about the value of data generated during oil and gas exploration and production. The digital oilfield movement may have started as a means of cutting operating costs by automating tasks associated with equipment monitoring and maintenance, but it has delivered many side benefits.

Many industry experts believe the chief benefit was showing oil and gas producers how to collect data that, when properly managed, can have much more strategic value than simply telling well operators when to repair or replace worn equipment. In fact, there is a growing belief within the industry that the data generated by the sensors and other automation devices sprinkled across oil and gas fields should be viewed as an asset, and considered just as valuable as a company’s most expensive production equipment or its most talented engineers.

Philippe Flichy, senior digital oilfield advisor for enterprise technology with oilfield services company Baker Hughes, is among those holding this opinion. He refers to data generated in the course of oil and gas exploration and production as "an asset that can talk," though he also points out that the value of what the data has to say is directly related to how well it’s managed.

Flichy offered evidence to support this position in a paper he recently presented to the Society of Petroleum Engineers. The paper, titled "Trusted Data as a Company Asset," cited a study in which oil and gas companies reported boosting overall profitability by as much as 6% as a direct result of effective data management. It also referenced specific companies, including Shell Oil Co., that have benefitted from effective data management in multiple ways, including seeing wells produce ahead of expected schedules and beyond anticipated capacity.

While these stories are impressive, they are still the exception rather than the rule because oil and gas producers lag their counterparts in other industries when it comes to practicing effective data management. With all of the information flowing through their operations, many oil and gas producers are, in essence, being forced to contend with what is known as "big data"—and they need tools to help them meet that challenge.

A quicker path to effective data management

As they typically do, technology vendors have responded to this situation with a new class of solutions. One of the most promising is called data virtualization, which is a method of simplifying the process of building an infrastructure for transforming Big Data into information that can be relied upon to make sound business decisions.

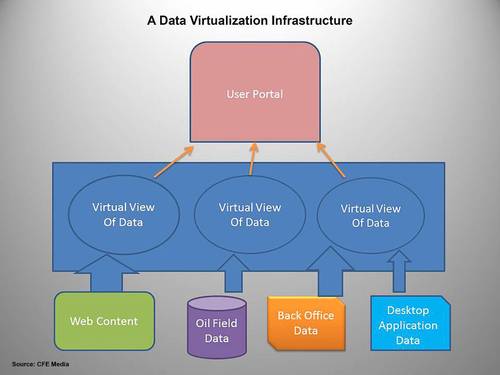

Building such an infrastructure requires integrating data generated across the enterprise in such a way that it at least appears to be housed in a central location. Once built, this infrastructure gives all users, no matter their position or location within the enterprise, quick, easy access to all the data they may need to do their jobs at any given time.

Historically, companies had four options for bringing data from multiple sources into a single platform, according to Phillip Howard, a research director with Bloor Research.

These options include:

- Custom integration, which involves hard-coding of links between applications that need to share information

- Enterprise application integration, which entails building an enterprise services bus and creating connectors (also typically via hand coding) for applications to pass information to one another along the bus

- Data replication, or creating multiple copies of data and placing them in readily accessible repositories

- Extract, transform, and load, which is taking summary information about data generated in individual applications and placing it in a central data warehouse that can be accessed by a broad spectrum of users.

Each of these options is a complex—and therefore expensive and time-consuming—undertaking, which is why they have largely been shunned by all but the largest oil and gas producers. In recent years, even the large producers have become less enamored of these options because none of them offers a particularly effective method for filtering Big Data.

Douglas Laney, a vice president with Gartner, is widely credited with introducing the concept of Big Data in a 2001 paper in which he said the three V’s of business data (volume, velocity, and variety) were forcing companies to seek new data management approaches. In that paper, Laney wrote, "The e-commerce surge, a rise in merger/acquisition activity, increased collaboration, and the drive for harnessing information as a competitive catalyst is driving enterprises to higher levels of concern about how data is managed."

Since Laney wrote those words, the conditions driving concerns about data management have only intensified. The volume of data most companies generate has increased exponentially. Oil and gas producers have seen data variety expand to include feeds from the digital oilfield and various forms of unstructured data, such as documents created on desktop applications, email, social media posts, and audio and video files.

Conditions have changed so dramatically that Flichy’s paper, published in 2015, references the seven V’s of data—adding variability, veracity, visualization, and value to the mix.

Data must be trustworthy

Of these seven V’s, veracity may be the most important because it speaks to data accuracy. Inaccurate data can’t be trusted, and therefore has little value as a decision-making tool. "One of the biggest problems we have in the oil and gas industry is people not trusting their data sources," Flichy said. "So they tend to redo things. They find pieces of data in different places and stitch them together to do their own analysis. We often hear people say engineers spend most of their time looking for data; they actually are spending that time matching data."

To stop this cycle, Flichy said, "You have to create a single version of the truth, and you have to do that often, so people trust the data enough to feel confident in using it to make decisions."

Data integration is supposed to provide that single source of trusted data. However, few oil and gas companies can afford long, expensive data-integration projects, particularly at a time when the amount of data companies must manage is rapidly rising and oil and gas prices are falling. That’s why techniques like data virtualization are gaining popularity.

"We started a data virtualization project at Baker Hughes about four years ago," Flichy said, "and the results have been quite remarkable."

Baker Hughes created its own data virtualization platform and dubbed it the "Baker Hughes Information Reservoir." After initially using it to ensure that all departments had access to the exact same sets of data, Flichy said, the platform has evolved into a tool for increasing overall efficiency.

Among the uses for the platform, according to Flichy, is analyzing data pulled from different wells located in the same field or operating under similar conditions. The analysis results in the development of new strategies for improving the output of the lower-performing wells. "We are big proponents of virtualization," Flichy said. "It has given us a new level of business intelligence."

Taking the heavy lifting out of data integration

Forrester Research likens data virtualization to building a service-oriented architecture (SOA) for data. "Where the traditional SOA approach has focused on business processes, data virtualization focuses on the information that those business processes use," said Forrester analyst Noel Yuhanna. "Virtualization promises to ease the impediments to data integration by decoupling data from applications and storing it on a middleware layer."

Technology vendors are now offering data virtualization platforms that include this middleware layer along with extensions that aid in the process of organizing and filtering data. While these platforms may be structured somewhat differently, depending on the vendor, they all are designed to deliver the same result: the ability to pull various forms of data from multiple sources into one virtual location and present it to users in a consistent, easily accessible manner.

Employing a data virtualization platform takes a lot of the complexity out of managing Big Data because it allows for leaving most data in their original systems. When users access data, typically through some type of dashboard or portal, their request for information sets off a series of commands that, in effect, searches different databases or repositories located across the enterprise to pull bits of data and combine them to form the answer to the user’s query.
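To make the idea concrete, here is a minimal sketch of a request that is answered by combining two sources at query time, with each source left in place. It is an illustration only, not how any vendor's platform or the Baker Hughes system works. The well table, the sensor export, and every function name are hypothetical.

```python
import csv
import io
import sqlite3


def make_well_db():
    """Hypothetical source 1: a relational system holding well metadata."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE wells (well_id TEXT PRIMARY KEY, field TEXT)")
    conn.executemany(
        "INSERT INTO wells VALUES (?, ?)",
        [("W-1", "North"), ("W-2", "North"), ("W-3", "South")],
    )
    return conn


# Hypothetical source 2: a sensor export that lives in a separate system as CSV.
SENSOR_CSV = """well_id,barrels_per_day
W-1,120
W-2,80
W-3,200
"""


def wells_in_field(conn, field):
    """Ask the relational source which wells belong to a field."""
    rows = conn.execute(
        "SELECT well_id FROM wells WHERE field = ? ORDER BY well_id", (field,)
    )
    return [row[0] for row in rows]


def read_output(csv_text):
    """Ask the sensor source for the latest output per well."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["well_id"]: float(row["barrels_per_day"]) for row in reader}


def field_output(conn, csv_text, field):
    """Answer one user query by combining both sources at request time.

    Nothing is copied into a central store; each source is read where it lives.
    """
    output = read_output(csv_text)
    return [(w, output[w]) for w in wells_in_field(conn, field) if w in output]


conn = make_well_db()
result = field_output(conn, SENSOR_CSV, "North")
print(result)
```

The user sees a single answer for the "North" field, while the metadata and the sensor readings never leave their original systems. A real platform would add caching, security, and query optimization on top of this basic pattern.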

Moray Laing, an energy industry consultant with business intelligence software supplier SAS Institute Inc., agrees that data virtualization removes a lot of the overhead typically associated with data integration, but he cautions against viewing it as a substitute for the type of data management that’s necessary to develop and maintain a single source of trusted data.

"Maintaining good data quality through master data management is the real way to maintain a single version of the truth," Laing said. "However, we realize that some master data management steps, such as building a data warehouse, can take years to complete. Data virtualization provides the ability to smoothly integrate many sources and forms of data into a single access point, thus reducing a lot of the heavy lifting for the scientists and engineers who use that data."

The argument for a data curator

Flichy said the way to ensure a steady flow of good quality data to a virtualization platform is to enact policies that require validating all data at its source. "Once data is validated at the source, you can be confident in telling users, ‘This is the original data as it was entered in the system.’ Then if that data is moved or altered in any fashion, it must be re-validated at the source."
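One way such a policy could be enforced in software is sketched below. It assumes a content fingerprint (a SHA-256 digest) is recorded when a record passes validation at its source, so that any later alteration can be detected and sent back for re-validation. The hashing approach, the field names, and the validation rules are assumptions made for illustration and are not described by Flichy. The sketch detects altered content only, not the act of moving data.

```python
import hashlib
import json


def fingerprint(record):
    """Return a stable SHA-256 digest of a record's contents."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def validate_at_source(record):
    """Hypothetical source check; returns the digest of the validated record."""
    if "well_id" not in record or "pressure_psi" not in record:
        raise ValueError("missing required field")
    if record["pressure_psi"] < 0:
        raise ValueError("pressure cannot be negative")
    return fingerprint(record)


def needs_revalidation(record, validated_digest):
    """True if the record no longer matches what was validated at the source."""
    return fingerprint(record) != validated_digest


original = {"well_id": "W-1", "pressure_psi": 2150.0}
digest = validate_at_source(original)

unchanged_copy = dict(original)
altered_copy = dict(original, pressure_psi=2510.0)

print(needs_revalidation(unchanged_copy, digest))
print(needs_revalidation(altered_copy, digest))
```

An unchanged copy matches the recorded digest, while the copy with transposed digits does not and would be flagged for re-validation.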

Flichy believes so strongly in this approach that he has been advocating for the creation of a position known as a "data curator." The person holding this job would be responsible for making sure that all data is properly maintained before being fed to a virtualization platform.

Flichy likens the data curator to the staff that cares for pieces in a museum. "They know which pieces have the most value," he said. "With data, being aware of its value is step one. Step two is paying special attention to the most valuable pieces. If there’s a hint of degradation to my most valuable pieces of data, I have to take action to repair it immediately."

The nature of the oil and gas industry makes it the ideal place to both expand the use of data virtualization and pioneer the employment of data curators, according to Flichy.

He said that the industry, perhaps more than any other, relies heavily on large amounts of widely distributed data. Oil and gas companies also routinely populate their fields and facilities with equipment and technology from a wide range of vendors whose products are not always easy to integrate.

"This industry also is experiencing a downturn, which has everyone pushing for greater efficiency," said Flichy. "How do you find greater efficiency? You turn to analytics, but analytics do you no good if you can’t trust the underlying data."

Virtualization could promise a quicker, cheaper path to trusted data than traditional approaches.

Sidney Hill is a graduate of the Medill School of Journalism at Northwestern University. He has been writing about the convergence of business and technology for more than 20 years.

Quick article synopsis

Problem: Oil and gas producers are struggling to effectively manage all of the data flowing through their enterprises. The amount and types of data they must deal with are growing rapidly, while their available resources are shrinking, particularly in an industry downturn.

Solution: Data virtualization offers a fast, inexpensive method of managing Big Data, at least when compared with traditional data management approaches. Data virtualization revolves around a platform that integrates data generated across the enterprise in such a way that it at least appears to be housed in a central location. Once built, this infrastructure gives all users, no matter their position or location within the enterprise, quick, easy access to all the data they may need to do their jobs at any given time.

Action to take: Do research on data virtualization, and speak with vendors who offer data virtualization platforms. Industry analyst firms are good places to begin your research. Here are a few that have published reports on data virtualization:

Vendors who specialize in data management and business intelligence software, such as SAS and Denodo, are among those offering data virtualization solutions.
