
Mention “robots” at a cocktail party, and most people will think of Rosie from The Jetsons, the quirky Star Wars droids R2-D2 and C-3PO, Arnold Schwarzenegger’s Terminator, or Pixar’s recent WALL-E movie. But mention “robots” in a room full of military scientists, and you’ll get a considerably different set of ruminations.

While we are getting ever closer to the day when we can all have a personal Rosie to do our dishes, most robots today perform less glamorous, but equally important, tasks. The U.S. military, for example, increasingly uses unmanned autonomous vehicles to explore buildings, defuse bombs, and more. NASA uses robotic mobile vehicles such as Sojourner, Freedom, and Spirit to take soil samples and send us photos from Mars and elsewhere in our solar system.

Technological advances are enabling robot developers to increase processing capability while shrinking footprint and power consumption. As this happens, the trend is turning toward mobile autonomous vehicles: robots that can function independently or with few inputs. Developing such robots requires a careful balance of power, performance, weight, and size; commanding and controlling them hinges on finding a computing solution that meets these requirements.

AVC’s Observer I using a Quantum3D Thermite TL for command and control. Source: AVC

Complex designs

Mobile robots come in many flavors. While it is possible to implement a mobile autonomous robot using a single simple microcontroller, the result would be too simplistic to be useful. To achieve the level of autonomy demanded by today’s rugged military applications or by space exploration, designers implement a more hierarchical architecture.

An example of this hierarchical structure is the architecture of the Observer I robot from Autonomous Vehicle Corporation (AVC). Its low-level processing layer uses dedicated 8- and 32-bit microcontrollers, as well as an FPGA, to perform motor speed control, sensor data acquisition (including sonar, infrared, and LIDAR), and image processing of camera data.

The acquired data is passed to a sensor fusion processor (or process), whose output feeds the behavior processor. The behavior processor interfaces with the user and establishes the basic rules and autonomy needed to fulfill the mission objectives.

Robot behavior is controlled by “behavior processors” from the Quantum3D Thermite family of tactical visual computers. Unlike a desktop, or even a ruggedized laptop PC, these computers must be as ready for abuse as they are ready to perform.
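To make the layered data flow concrete, here is a minimal Python sketch of the two upper layers. It is not AVC’s software: it assumes each low-level sensor reports an obstacle range with a known noise variance, fuses the ranges by inverse-variance weighting (a standard technique chosen here for illustration), and hands the result to a one-rule behavior layer. The names `RangeReading`, `fuse_ranges`, and `behavior_step`, the variances, and the 0.5 m stop threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical sketch of the sensor-fusion and behavior layers; not AVC's code.


@dataclass
class RangeReading:
    """One obstacle-range measurement from a low-level sensor (hypothetical format)."""
    sensor: str        # e.g. "sonar", "ir", "lidar"
    distance_m: float  # measured range to the nearest obstacle, meters
    variance: float    # assumed measurement variance, square meters


def fuse_ranges(readings: list[RangeReading]) -> float:
    """Sensor-fusion layer: inverse-variance weighted mean of the ranges.

    Less noisy sensors (smaller variance) get proportionally more weight.
    """
    if not readings:
        raise ValueError("no readings to fuse")
    weights = [1.0 / r.variance for r in readings]
    weighted_sum = sum(w * r.distance_m for w, r in zip(weights, readings))
    return weighted_sum / sum(weights)


def behavior_step(fused_distance_m: float, stop_distance_m: float = 0.5) -> str:
    """Behavior layer: one illustrative rule applied to the fused estimate."""
    return "stop" if fused_distance_m < stop_distance_m else "advance"


readings = [
    RangeReading("sonar", 1.20, 0.04),
    RangeReading("ir", 1.00, 0.09),
    RangeReading("lidar", 1.10, 0.01),
]
fused = fuse_ranges(readings)
print(f"fused range {fused:.3f} m -> {behavior_step(fused)}")
```

Inverse-variance weighting is the simplest fusion rule for independent measurements of a single quantity; a fielded fusion stage would more likely run a Kalman filter so that estimates are also smoothed over time.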

AVC’s Observer II uses a Quantum3D Thermite 1300 for command and control.

Software at this level is generally written in C/C++ and often runs on an embedded real-time operating system (RTOS). This processor is responsible for most of the autonomy decisions and uses a number of decision-making paradigms, such as Bayesian inference or fuzzy logic. One extremely common task for the behavior processor is simultaneous localization and mapping (SLAM), using any number of sensors, including vision, LIDAR, and odometry.
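Fuzzy logic is easiest to see in a small example. The sketch below maps obstacle distance to a speed command using three fuzzy sets (near, medium, far) and zero-order Sugeno-style defuzzification, that is, a weighted average of constant rule outputs. The set boundaries and speeds are assumptions for illustration, not values from Observer I or II, and the function names are hypothetical.

```python
# Hypothetical fuzzy speed controller; boundaries and speeds are assumed values.


def ramp_down(x: float, lo: float, hi: float) -> float:
    """Membership 1 at or below lo, 0 at or above hi, linear in between."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def ramp_up(x: float, lo: float, hi: float) -> float:
    """Membership 0 at or below lo, 1 at or above hi, linear in between."""
    return 1.0 - ramp_down(x, lo, hi)


def triangle(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership: 0 outside (a, c), peaking at 1 when x == b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Rule base (assumed values): IF distance is <class> THEN speed is <value> m/s.
RULES = {"near": 0.0, "medium": 0.5, "far": 1.5}


def fuzzy_speed(distance_m: float) -> float:
    """Fuzzify obstacle distance, fire the rules, defuzzify by weighted average."""
    memberships = {
        "near": ramp_down(distance_m, 0.5, 1.5),
        "medium": triangle(distance_m, 0.5, 1.5, 3.0),
        "far": ramp_up(distance_m, 1.5, 3.0),
    }
    total = sum(memberships.values())
    if total == 0.0:
        return 0.0  # no rule fired: fail safe by stopping
    return sum(memberships[k] * speed for k, speed in RULES.items()) / total


for d in (0.3, 1.0, 2.25, 4.0):
    print(f"obstacle at {d} m -> {fuzzy_speed(d):.2f} m/s")
```

Because neighboring sets overlap, the commanded speed changes smoothly as the obstacle approaches instead of jumping at a single threshold, which is the usual argument for fuzzy rules in this role.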

Quantum3D’s Thermite 1300 tactical visual computer.

To establish control of the robot, users connect from a ground station to the behavior processor through the robot’s processing architecture. For Observer I and Observer II, AVC again turned to Quantum3D, using its human-machine interface (HMI) toolset, IData, to create the user interface between robot and ground station.

One critical feature of a command and control interface is built-in communication capability that lets users quickly and easily develop the communication links between a ground station and a robot. To be effective, the robot’s user interface must include data from the robot’s sensors, such as sonar data, speed, and heading, which together establish the configuration of the robot vehicle. As an added bonus, incorporating a three-dimensional model of the robot into the interface allows the user to manipulate the vehicle in specific ways.
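The article does not describe the IData link protocol, so the following is only a hypothetical illustration of robot-to-ground-station telemetry carrying the fields mentioned above (sonar data, speed, and heading). It uses Python’s standard `struct` module to pack a compact little-endian binary frame; the field layout, units, and the `TelemetryFrame` name are assumptions.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire format, little-endian, no padding:
#   uint16 robot_id | float32 speed_mps | float32 heading_deg | uint8 n_sonar
#   followed by n_sonar float32 sonar ranges (meters).
HEADER = struct.Struct("<HffB")


@dataclass
class TelemetryFrame:
    robot_id: int
    speed_mps: float
    heading_deg: float
    sonar_m: list[float]

    def pack(self) -> bytes:
        """Serialize on the robot side for transmission to the ground station."""
        header = HEADER.pack(self.robot_id, self.speed_mps,
                             self.heading_deg, len(self.sonar_m))
        body = struct.pack(f"<{len(self.sonar_m)}f", *self.sonar_m)
        return header + body

    @classmethod
    def unpack(cls, data: bytes) -> "TelemetryFrame":
        """Parse on the ground-station side."""
        robot_id, speed, heading, n_sonar = HEADER.unpack_from(data, 0)
        sonar = list(struct.unpack_from(f"<{n_sonar}f", data, HEADER.size))
        return cls(robot_id, speed, heading, sonar)


wire = TelemetryFrame(robot_id=7, speed_mps=1.25, heading_deg=90.0,
                      sonar_m=[0.5, 2.0]).pack()
print(len(wire), TelemetryFrame.unpack(wire))
```

Fixed-width binary frames keep bandwidth low on a radio link. Values pass through float32, so a speed such as 1.1 comes back as roughly 1.1000000238; a real protocol would also need framing, a checksum, and a version field.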

Quantum3D’s Thermite TL tactical visual computer, the thinnest and lightest behavior processor that still delivers the requisite performance.

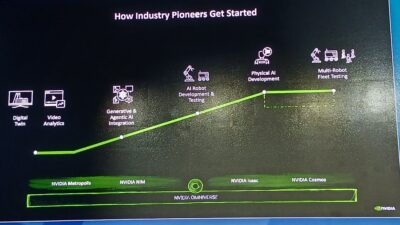

Beyond single robots

Designing a single-robot system and its accompanying user interface is an achievable task. The next step, however, is to develop new control structures that let a single user control large numbers of robots from the same user interface. Achieving this requires careful consideration of the vehicles’ hardware and software architectures, including the autonomy software in the behavior processor. Furthermore, a multi-vehicle distributed system will require user interfaces that offer richer data representation than is currently available.
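One way to picture a single operator commanding many robots is a ground-station console that fans a high-level command out to a whole group and then reports an aggregate view rather than one screen per vehicle. The sketch below is hypothetical: `RobotLink` and `FleetConsole` are invented names, no real radio I/O is performed, and it assumes each robot’s behavior processor handles the details of carrying out a command such as `"explore"`.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Iterable, Optional

# Hypothetical multi-robot operator console; names and commands are illustrative.


@dataclass
class RobotLink:
    """Ground-station proxy for one vehicle (hypothetical; no real radio I/O)."""
    robot_id: str
    group: str
    mode: str = "idle"
    sent: list[str] = field(default_factory=list)

    def send(self, command: str) -> None:
        # A real link would transmit to the robot's behavior processor; this
        # sketch records the command and assumes the robot adopts it as its mode.
        self.sent.append(command)
        self.mode = command


class FleetConsole:
    """One operator console addressing many robots through one interface."""

    def __init__(self, robots: Iterable[RobotLink]) -> None:
        self.robots = {r.robot_id: r for r in robots}

    def command(self, command: str, group: Optional[str] = None) -> int:
        """Send a high-level command to all robots, or to one group; return count sent."""
        targets = [r for r in self.robots.values()
                   if group is None or r.group == group]
        for r in targets:
            r.send(command)
        return len(targets)

    def summary(self) -> Counter:
        """Aggregate view for the operator: number of robots in each mode."""
        return Counter(r.mode for r in self.robots.values())


console = FleetConsole([RobotLink("ugv1", "ground"), RobotLink("ugv2", "ground"),
                        RobotLink("uav1", "air")])
console.command("explore", group="ground")
print(console.summary())
```

The design choice here is that the operator issues goals, not joystick inputs, and leaves the per-vehicle detail to each robot’s autonomy software, which is what makes one-to-many control tractable.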

AVC’s robot user interface (developed using Quantum3D’s IData).

Developing large, multi-robot systems requires innovative technology. As the robots in a system become more diverse, the mission possibilities for such systems will expand. Specialized robots will be able to accomplish specific tasks more efficiently, instead of every robot in the system having to fit a “one-size-fits-all” model. Robots can be optimized for the specialized tasks they perform well, and robots from multiple manufacturers will be able to join the robot cluster. Deploying such a system will reduce overall mission deployment costs while achieving a higher degree of success.
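Heterogeneous, multi-vendor teams raise the question of which robot gets which task. A simple sketch under stated assumptions: each robot advertises a set of capabilities, and a greedy allocator gives each task to the qualified free robot with the fewest unneeded capabilities, keeping generalists available for later tasks. The capability names, vendors, and the `allocate` function are hypothetical. Greedy assignment is not optimal in general; auction-based allocation or the Hungarian algorithm are common alternatives.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical capability-based task allocation for a mixed-vendor robot team.


@dataclass(frozen=True)
class RobotInfo:
    robot_id: str
    vendor: str                  # informational only; allocation ignores it
    capabilities: frozenset[str]


@dataclass(frozen=True)
class Task:
    name: str
    requires: frozenset[str]


def allocate(tasks: list[Task], robots: list[RobotInfo]) -> dict[str, Optional[str]]:
    """Greedy capability matching: one robot per task, tasks handled in order."""
    free = list(robots)
    plan: dict[str, Optional[str]] = {}
    for task in tasks:
        qualified = [r for r in free if task.requires <= r.capabilities]
        if not qualified:
            plan[task.name] = None  # no free robot can do this task
            continue
        # Prefer the most specialized qualified robot (fewest unneeded
        # capabilities); ties broken by robot_id for determinism.
        best = min(qualified,
                   key=lambda r: (len(r.capabilities - task.requires), r.robot_id))
        plan[task.name] = best.robot_id
        free.remove(best)
    return plan


robots = [
    RobotInfo("ugv-a", "VendorA", frozenset({"drive", "camera"})),
    RobotInfo("ugv-b", "VendorB", frozenset({"drive", "camera", "manipulator"})),
    RobotInfo("uav-c", "VendorC", frozenset({"fly", "camera"})),
]
tasks = [
    Task("inspect_room", frozenset({"drive", "camera"})),
    Task("defuse", frozenset({"drive", "manipulator"})),
    Task("overwatch", frozenset({"fly", "camera"})),
]
print(allocate(tasks, robots))
```

Because robots are matched only on advertised capabilities, a new vendor’s vehicle can join the team simply by publishing its capability set.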

Today’s military increasingly uses unmanned ground and aerial robotic vehicles. To be effective, such vehicles must incorporate cutting-edge command and control systems. Going forward, we need to look beyond single-robot paradigms toward commanding and controlling multiple robots simultaneously, including robot vehicles that can process increasingly complex data within an integrated system of specialized robots working together to complete tasks.

S. Boskovich is president of Autonomous Vehicle Corp. (AVC).

Edited by C.G. Masi, senior editor, Control Engineering Machine Control eNewsletter.