Explore the security and safety aspects of the emerging industry known as physical artificial intelligence (AI). As physical AI evolves from concept to reality, understanding its implications for human safety and security becomes critical.

Industrial physical AI insights

- Explore physical AI and how it differs from LLM AI.

- Understand the fundamental security vulnerabilities, unintentional risks, human factors and cybersecurity challenges of physical AI.

- Look at risk mitigation and a testing framework for physical AI development.

It’s necessary to explore the security and safety aspects of the emerging industry known as “physical AI,” a term heavily promoted by Nvidia. As physical artificial intelligence (AI) evolves from concept to reality, understanding its implications for human safety and security becomes critical.

What is physical AI? How does it differ from LLM AI?

Physical AI can be defined as an artificial intelligence system with integrated mechanical components that perform physical activities in a physical environment with real-world sensor feedback. These systems represent a fundamental departure from traditional AI systems such as large language model AI (LLM AI). LLM AI systems, such as ChatGPT by OpenAI or Claude by Anthropic, operate in virtual spaces, whereas physical AI systems perform tasks in the physical world.

Related terms for these systems include generative physical AI and embodied AI, among others. While there are subtle differences between these systems, those distinctions are not particularly relevant for the security and safety analysis in this article. All these systems share similar challenges and risk profiles that need to be examined comprehensively.

Examples of physical AI

Examples of physical AI include fully or partially autonomous personal robots and autonomous vehicles (AVs). This article covers physical AI systems broadly rather than focusing on a specific industry, although it makes some references to industrial applications, personal robots and autonomous vehicles.

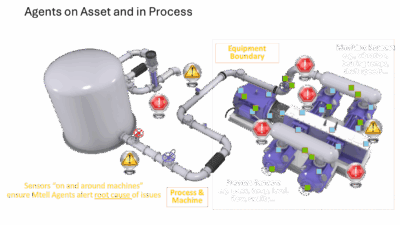

The scope of physical AI extends far beyond these primary examples. Industrial collaborative robots, autonomous drones and similar systems with AI integration all fall under this category. Each of these applications presents unique challenges while sharing common security and safety concerns.

Fundamental physical AI security vulnerabilities

Physical AI systems exhibit security vulnerabilities remarkably similar to those found in industrial automation and control systems (IACS). As with any protection methodology, physical AI adheres to the principle that a system is only as strong as its weakest link, and safety and security vulnerabilities can emerge from multiple interconnected components.

The complexity of these systems cannot be overstated. Each physical AI system might contain millions of lines of code. This massive codebase creates an extensive attack surface where vulnerabilities can easily hide, making thorough security validation a challenging task.

AI processing can occur in different architectural configurations. Systems can run remotely on dedicated servers, operate in cloud environments, process locally on edge devices or employ hybrid models that combine multiple configurations. Most AI systems operate on server-based or cloud infrastructures, though there is a growing trend toward hybrid implementations.

Edge AI, which processes data locally on a device, may offer better privacy protection and fewer security vulnerabilities compared to remote or hybrid models. However, this approach has its own limitations, particularly limited computational power, which may prevent it from handling complex processing tasks reliably.

The security risks facing physical AI systems include remote hijacking, where malicious actors gain unauthorized control over the system, for example to demand a ransom. Attackers might also focus on controlling critical safety functions or deliberately disabling essential safety features, potentially putting human users at risk.

Unintentional risks and human factors for physical AI

Like IACS, physical AI systems face challenges from unintentional mistakes. As in IACS, unintentional threats can arise during software updates or system modifications, potentially introducing new risks or exposing previously unknown vulnerabilities.

Over-the-air software updates present another category of risk. Since most current physical AI systems rely on remote servers, cloud platforms or hybrid configurations, human-in-the-middle attacks (also called man-in-the-middle or on-path attacks), in which an attacker intercepts or alters traffic between the update server and the device, could occur during the update process, compromising system integrity.
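
One common defense against tampering in transit is to have the device verify an integrity tag before installing an update. The following is a minimal Python sketch under stated assumptions, not a production design: the function names, the shared key and the payload are all hypothetical, and it uses a shared-secret HMAC only because that is available in the standard library. Real update systems typically use asymmetric signatures so that the device never holds the signing key.

```python
import hashlib
import hmac


def sign_update(image: bytes, key: bytes) -> str:
    """Vendor side: compute an HMAC-SHA256 tag over the update image."""
    return hmac.new(key, image, hashlib.sha256).hexdigest()


def verify_update(image: bytes, tag: str, key: bytes) -> bool:
    """Device side: accept the image only if the tag matches."""
    expected = hmac.new(key, image, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, tag)


# Hypothetical values for illustration only
key = b"hypothetical-shared-secret"
image = b"firmware v2.1 payload"
tag = sign_update(image, key)

genuine_ok = verify_update(image, tag, key)
tampered_ok = verify_update(image + b"malicious patch", tag, key)
print(genuine_ok, tampered_ok)  # True False
```

An image altered in transit fails verification, so the device can refuse to install it and keep running the previous known-good version.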

Cybersecurity framework, physical AI

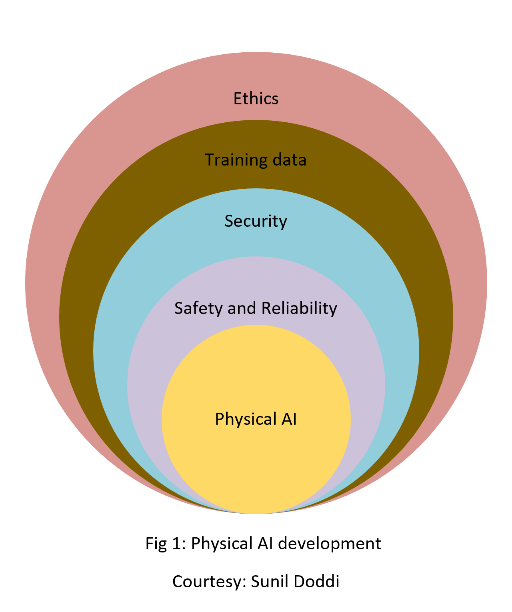

The cybersecurity approach for physical AI can be built upon established concepts from the IACS security lifecycle, which provides a proven framework starting from the initial design stage and continuing through final decommissioning. However, because physical AI systems work so closely with humans, one stage deserves particular emphasis: secure decommissioning.

This secure decommissioning requirement stems from significant data gathering and privacy concerns unique to physical AI. These systems are specifically designed to assist users at a deeply personal level, which means they inevitably gather extensive amounts of personal data about the users. Physical AI systems are more intrusive than traditional devices like laptops or smartphones due to their continuous physical presence in our personal spaces and their ability to observe and record our daily activities.

The privacy implications extend far beyond what most people might initially consider. These systems collect biometric data, including facial features and voice recordings, behavioral patterns, environmental mapping data and detailed logs of personal interactions. Simply disposing of used physical AI systems in traditional disposal facilities could allow some of the sensitive information to fall into the wrong hands and become subject to reverse engineering attempts by malicious actors.
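
As a rough illustration of secure decommissioning, a device could be blocked from disposal until every category of personal data has been confirmed erased. The Python sketch below is hypothetical: the class, the category names (taken from the data types listed above) and the device ID are assumptions for illustration, and it tracks confirmation only; it does not perform the erasure itself.

```python
from dataclasses import dataclass, field

# Data categories named in the text; the checklist itself is hypothetical.
DATA_CATEGORIES = ("biometric", "behavioral", "environment_map", "interaction_log")


@dataclass
class DecommissionRecord:
    device_id: str
    wiped: set = field(default_factory=set)

    def mark_wiped(self, category: str) -> None:
        """Record that one data category has been verifiably erased."""
        if category not in DATA_CATEGORIES:
            raise ValueError(f"unknown data category: {category}")
        self.wiped.add(category)

    def outstanding(self) -> list:
        """Categories that still hold personal data."""
        return [c for c in DATA_CATEGORIES if c not in self.wiped]

    def cleared_for_disposal(self) -> bool:
        """True only when every category has been erased."""
        return not self.outstanding()


record = DecommissionRecord("robot-001")
record.mark_wiped("biometric")
print(record.outstanding())           # ['behavioral', 'environment_map', 'interaction_log']
print(record.cleared_for_disposal())  # False
```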

Data gathering concerns must be addressed at the fundamental design level of these systems rather than being treated as an afterthought. This applies to all of the sensitive information these systems collect: biometric identifiers, behavioral analysis, environmental maps and records of personal interactions and preferences. Ethics is another critical component that should be integrated throughout the entire development and deployment process.

The human factor introduces additional complexity through what I call “trust bias.” Many people naturally tend to trust automated systems and assume they will not make mistakes, particularly when these systems perform reliably in routine situations. However, unless a task is repetitive and performed in a controlled environment, there remains a slight chance of error that could harm someone in the physical world. Even with repetitive tasks, systematic design faults might still occur, creating unexpected failure modes.

Functional safety framework, physical AI

Any mechanical component that performs tasks in the physical world carries the inherent potential to harm people if it does not function as designed or if it encounters situations outside its operational design. The established IEC 61508 standard [covering functional safety of electrical, electronic and programmable electronic safety-related systems] could serve as an excellent starting point for functional safety integration in physical AI systems, providing a systematic framework for identifying, assessing and mitigating safety risks.

However, traditional safety approaches are not sufficient for the dynamic environments in which physical AI operates. Conventional safety measures typically rely on fixed failure states and predetermined response protocols. An adaptive safety approach is more practical, and ultimately essential, because physical AI systems must operate in unpredictable, rapidly changing environments where static safety rules may prove inadequate.
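
To make the contrast between static and adaptive rules concrete, the Python sketch below scales a robot's permitted speed with its distance from the nearest person instead of applying one fixed limit. The function name and all thresholds are hypothetical values chosen for illustration, not figures from any standard.

```python
def allowed_speed(distance_m: float,
                  stop_m: float = 0.5,
                  full_m: float = 2.0,
                  max_speed: float = 1.0) -> float:
    """Return the permitted speed (m/s) given distance to the nearest human.

    All thresholds are hypothetical illustration values.
    """
    if distance_m <= stop_m:
        return 0.0        # too close: stop moving
    if distance_m >= full_m:
        return max_speed  # nobody nearby: full speed
    # In between: scale speed linearly with the remaining margin
    return max_speed * (distance_m - stop_m) / (full_m - stop_m)


for d in (0.3, 1.25, 3.0):
    print(d, allowed_speed(d))
```

A static rule would pick one speed for all situations; here the response changes continuously as the environment changes.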

Recent viral videos circulating on the internet have demonstrated these challenges clearly. In one notable incident, a robot became highly unstable, and an investigation revealed that the primary cause was its programming instruction to maintain stability at any cost; the programmers had not anticipated that this instruction could create dangerous scenarios. This example illustrates why dynamic safety assessment approaches are more important for physical AI than traditional fixed safety protocols.

Another recent video showed a robot stepping on a child’s foot, apparently because the system failed to properly recognize or appropriately respond to the presence of a human. These real-world incidents highlight the critical importance of sensitive sensor detection and appropriate adaptive safety response protocols in physical AI systems.

A simple shutdown function in physical AI systems is insufficient. Because humans interact with these systems in unpredictable ways, one shutdown mechanism may not be adequate, unlike in a typical process plant, where a de-energized state is fail-safe in most cases. A “de-energized” state in physical AI may not equate to a safe shutdown.
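
One way to picture this is a safe-state selector that chooses a response based on context instead of always cutting power. The Python sketch below is purely illustrative: the state names, the input flags and the decision rules are assumptions, not a validated safety policy.

```python
from enum import Enum


class SafeAction(Enum):
    POWER_OFF = "power_off"
    HOLD_POSITION = "hold_position"
    CONTROLLED_LOWER = "controlled_lower"


def choose_safe_action(carrying_load: bool,
                       balancing: bool,
                       human_nearby: bool) -> SafeAction:
    """Pick a context-dependent safe response (hypothetical rules)."""
    if carrying_load:
        # Cutting power could drop the load; set it down first
        return SafeAction.CONTROLLED_LOWER
    if balancing and human_nearby:
        # A de-energized balancing robot could fall onto the person
        return SafeAction.HOLD_POSITION
    # Nothing held and no fall hazard: de-energizing is acceptable
    return SafeAction.POWER_OFF


print(choose_safe_action(carrying_load=False, balancing=True, human_nearby=True))
# SafeAction.HOLD_POSITION
```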

Privacy and ethics implications of physical AI

When considering privacy concerns related to physical AI, we should not assume that camera vision represents the only privacy threat. The absence of visible cameras does not mean that users cannot be observed or monitored. Advanced sensing technologies like LiDAR can create detailed three-dimensional maps of environments and detect human presence and movement. Similarly, WiFi sensing technology can analyze radio frequency patterns to detect and track human movement within rooms, even through walls and other obstacles.

These sensing capabilities mean that physical AI systems can maintain comprehensive awareness of human activities and behaviors even when traditional cameras are not present or are disabled. This creates unprecedented challenges that existing privacy frameworks may not adequately address.

The comprehensive nature of data collection by physical AI systems extends far beyond what most users might expect or consent to. These systems can track daily routines through movement analysis, record conversations and build detailed profiles of personal preferences and behaviors over extended periods.

Physical reasoning and AI limitations

Physical reasoning can be defined as the cognitive process of understanding how objects behave in the physical world. It represents one of the main challenges facing current physical AI implementations. Humans (and even many animals) have an innate physical understanding. Although AI systems use advanced predictive and other techniques, they do not perceive things as humans naturally do. Additionally, we may never know how AI systems truly perceive these scenarios, though they keep improving with new technologies.

For example, when a car turns and disappears from view, we instinctively understand that the car continues to exist on the other side of the road. From an AI system’s perspective, however, the car may have simply disappeared when it moved out of the sensor’s range. This limitation in understanding object permanence represents a fundamental challenge in physical reasoning for AI systems, which can lead to inappropriate responses or behaviors when objects move out of direct sensor range.
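
A simple engineering approximation of object permanence is to keep a tracked object "alive" for a while after the sensor loses it and to predict where it has moved. The Python sketch below is a minimal one-dimensional illustration under stated assumptions: the `Track` class, the `update` function, the constant-velocity model and the three-second timeout are all hypothetical choices, not a description of how any particular system works.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Track:
    x: float               # last estimated position (m), 1-D for simplicity
    v: float               # last estimated velocity (m/s)
    unseen_s: float = 0.0  # time since the sensor last saw the object


def update(track: Track, dt: float, measurement: Optional[float],
           max_unseen_s: float = 3.0) -> Optional[Track]:
    """Advance a track by dt seconds, with or without a new measurement."""
    if measurement is not None:
        # Object is visible: refresh position and velocity estimates
        return Track(x=measurement, v=(measurement - track.x) / dt)
    unseen = track.unseen_s + dt
    if unseen > max_unseen_s:
        return None  # unseen for too long: drop the track
    # Object is out of view: assume it keeps moving at the same velocity
    return Track(x=track.x + track.v * dt, v=track.v, unseen_s=unseen)


car = Track(x=0.0, v=10.0)
car = update(car, dt=1.0, measurement=None)
print(car)  # Track(x=10.0, v=10.0, unseen_s=1.0)
```

The car that leaves sensor range is still represented, with a predicted position, instead of vanishing from the system's model of the world.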

AI hallucinations are a critical challenge

AI hallucinations represent perhaps the biggest challenge facing physical AI implementation today. These hallucinations are erroneous outputs generated by AI systems that can create significant safety challenges with real-world consequences. When AI systems mistake objects or mishandle situations due to hallucinations, the results can be dangerous in physical environments.

AI hallucinations occur primarily due to poor training data quality, including various forms of input bias that can skew the system’s understanding of physical reality. Inadequate training data, biased sampling data sets, or insufficient diversity in training scenarios can contribute to an AI system’s tendency to hallucinate and misinterpret real-world situations.

Risk mitigation and safety strategies

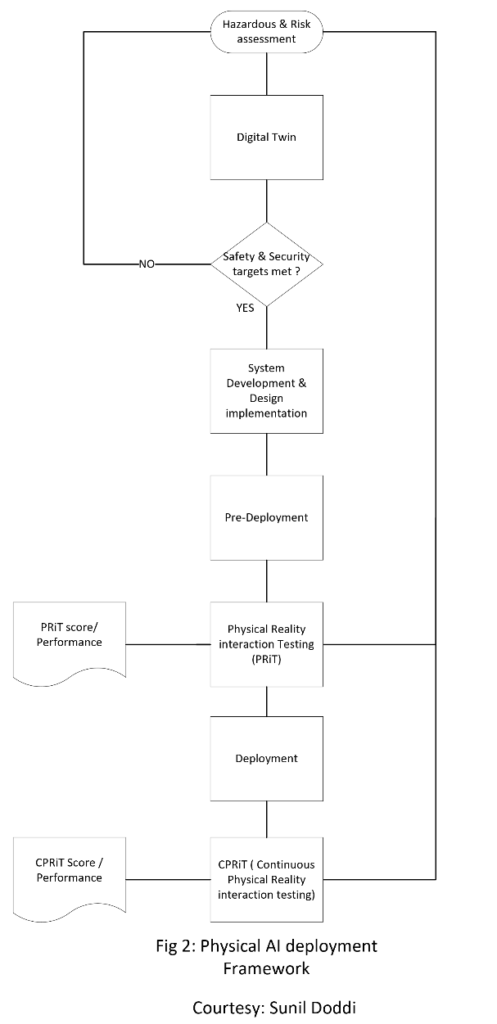

Addressing these challenges requires a comprehensive approach that begins with establishing human evaluation metrics and realism scoring systems for any physical AI implementation. Training AI software in carefully designed virtual environments is a crucial step, and these virtual environments should have their own measurable physical reality score (PRS) to ensure they adequately prepare AI systems for real-world operation. Digital twin technologies can provide valuable tools for creating these training environments.

The software development process must prioritize personnel safety from the earliest design stages rather than treating safety as an add-on feature. This means incorporating safety considerations into fundamental system architecture decisions and maintaining safety as a core requirement throughout the development lifecycle.

A human operator center should maintain responsibility for emergency response, operating 24/7 with qualified personnel capable of immediate intervention when physical AI systems encounter problems or unusual situations. This human oversight provides a critical safety net when AI systems reach the limits of their capabilities or encounter scenarios outside their training data.

Since training data inevitably involves real people and their personal information, ethical considerations must be integrated with security and privacy concerns throughout the development process, with appropriate consent mechanisms and privacy protections. The entire system development process should begin with ethical frameworks rather than attempting to add ethical considerations after the technical development is complete.

Future standards and regulatory needs for physical AI

The unique challenges presented by physical AI necessitate the development of new standards that address the issues identified above. Current standards and regulations, while valuable, were not designed to address the safety and security of physical AI comprehensively. Development of such standards will require collaboration between industries, privacy advocates, ethicists and regulatory bodies to ensure coverage of all relevant concerns.

The rapid advancement of physical AI technology demands that these frameworks be flexible enough to accommodate technological advancements. Physical AI is a transformative technology that can improve human life, but it also introduces unprecedented risks.

Testing framework for physical AI risk

Before deploying any physical AI system, it should undergo a physical reality interaction test (PRiT), analogous to a site acceptance test (SAT) in IACS environments. PRiT should include a scoring system, or a “physical realism score,” modeled on a risk-matrix framework, with defined quantitative or qualitative criteria. Initially, the industry may rely on qualitative or semi-quantitative scoring; over time, fully quantitative metrics may be developed. PRiT results must directly inform the system’s risk assessment, ensuring that identified vulnerabilities are addressed before release.
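
A semi-quantitative scoring scheme of this kind could follow the familiar severity-times-likelihood risk matrix. The Python sketch below is one possible shape for it: the category names, numeric weights, level thresholds and the pass rule are all hypothetical and would need to be defined by the industry or the project.

```python
# Hypothetical 4x4 risk matrix: ordinal scales for severity and likelihood
SEVERITY = {"negligible": 1, "minor": 2, "serious": 3, "critical": 4}
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "frequent": 4}


def risk_level(severity: str, likelihood: str) -> str:
    """Map one test finding to a qualitative risk level."""
    score = SEVERITY[severity] * LIKELIHOOD[likelihood]
    if score >= 9:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


def prit_passes(findings: list) -> bool:
    """Hypothetical release rule: no finding may be rated high."""
    return all(risk_level(s, l) != "high" for s, l in findings)


findings = [("minor", "unlikely"), ("critical", "possible")]
print([risk_level(s, l) for s, l in findings])  # ['medium', 'high']
print(prit_passes(findings))                    # False
```

Here the second finding blocks release until it is mitigated and retested, which mirrors the requirement that PRiT results feed directly into the risk assessment.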

Once deployed, continuous physical reality interaction testing (CPRiT) should occur, and CPRiT feedback should be incorporated into the system design. CPRiT performance data should then feed back to the digital twin for further optimization. This closed-loop feedback process enables ongoing optimization of reliability and safety.
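
The closed loop can be pictured as a rolling monitor over field test scores that flags when performance drifts below an acceptable level. The Python sketch below is an assumption-laden illustration: the class name, the window size, the 0-to-1 score scale and the threshold are hypothetical.

```python
from collections import deque


class CPRiTMonitor:
    """Rolling average of field scores; flags when a design review is due."""

    def __init__(self, window: int = 5, min_mean: float = 0.9):
        self.scores = deque(maxlen=window)  # keeps only the latest scores
        self.min_mean = min_mean

    def record(self, score: float) -> bool:
        """Add a score; return True if the rolling mean is below threshold."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data yet
        return sum(self.scores) / len(self.scores) < self.min_mean


monitor = CPRiTMonitor(window=3, min_mean=0.9)
flags = [monitor.record(s) for s in (0.95, 0.95, 0.95, 0.70)]
print(flags)  # [False, False, False, True]
```

A raised flag would be the trigger to send the recent performance data back to the design team and the digital twin.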

Sunil Doddi is a Certified Automation Professional, Certified Functional Safety Expert and Cybersecurity Fundamental Specialist. Edited by Mark T. Hoske, editor-in-chief, Control Engineering, WTWH Media.

Keywords

Physical AI, safety, cybersecurity

Consider This

How are you integrating safety and cybersecurity risk management into your use of industrial physical AI?

Online

Also from Sunil Doddi and Control Engineering, see:

https://www.controleng.com/ai-and-machine-learning

https://www.controleng.com/process-instrumentation-sensors/process-safety