Recent trends in machine vision include easier machine vision model training, faster robot-machine vision setup, and more compact and energy efficient machine vision cameras.

Trends in machine vision product insights

- EZ Automation Systems’ EZ Eye technology reduces image grading time across multiple operators when training deep learning systems for machine vision applications.

- ABB Robotics OmniCore EyeMotion is an intelligent, hardware-agnostic vision system that gives ABB robots the ability to see and understand the world for 2D and 3D vision-based applications.

- Emergent Vision Technologies Eros 10GigE cameras are small and low-power, adjust automatically to network speed, and are certified to GigE Vision 3.0 and RDMA standards.

Easier machine vision model training, faster robot-machine vision setup, and more compact, energy efficient machine vision cameras are among recent trends in machine vision technologies, according to information from the companies involved.

Easier machine vision model training

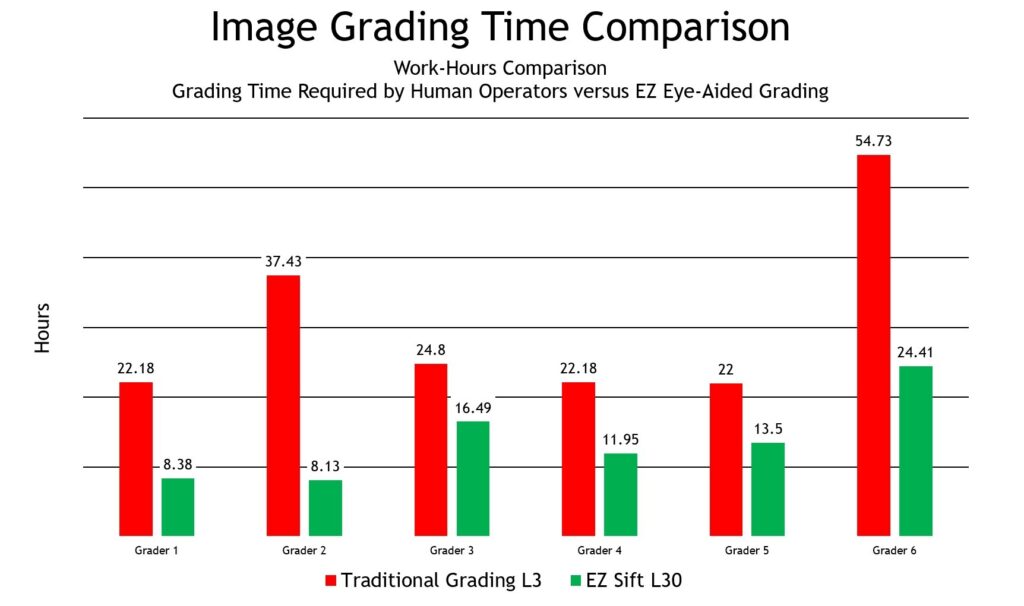

An automation platform accelerates artificial intelligence (AI) training for machine vision inspection applications. Automation provider EZ Automation introduced the latest benchmark for the efficiency gains that its EZ Eye automated inspection can deliver for quality assurance operations. Analysis of customer project data showed the technology enabled a 55% reduction in the work hours required to train deep-learning systems for challenging inspection applications while enhancing the accuracy of inspection operations, particularly those for foreign matter detection (Figure 1).

An algorithmic tool significantly accelerates the training process for deep learning-based vision systems. Traditional image grading requires human operators to manually sort through extensive image datasets to assign a pass or fail designation to each image. This time-intensive process becomes far more challenging when training systems to detect rare objects that may occur in less than 1% of inspected items, because operators must grade many more images to find enough examples.
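The effect of rarity on manual grading effort can be illustrated with simple arithmetic. The sketch below is not from the company; the target of 200 defect examples and the 3 seconds of grading time per image are assumed values chosen only for illustration, and `images_needed` and `grading_hours` are hypothetical helper names.

```python
def images_needed(target_positives: int, defect_rate: float) -> int:
    """Expected number of images to grade to collect `target_positives` defect examples."""
    if not 0 < defect_rate <= 1:
        raise ValueError("defect_rate must be in (0, 1]")
    return round(target_positives / defect_rate)


def grading_hours(image_count: int, seconds_per_image: float) -> float:
    """Total manual grading time in hours for `image_count` images."""
    return image_count * seconds_per_image / 3600


# Assumed values for illustration only (not taken from the article).
TARGET_DEFECT_EXAMPLES = 200
SECONDS_PER_IMAGE = 3.0

for rate in (0.10, 0.01):
    count = images_needed(TARGET_DEFECT_EXAMPLES, rate)
    hours = grading_hours(count, SECONDS_PER_IMAGE)
    print(f"defect rate {rate:.0%}: about {count} images, {hours:.1f} hours of grading")
```

Under these assumptions, a tenfold drop in the defect rate means roughly ten times as many images to grade for the same number of defect examples.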

The tool applies cyclic optimization principles to identify patterns in human grading behavior, allowing the system to automatically categorize similar images and focus human attention on grading novel or ambiguous cases. This intelligent sorting capability has proven particularly effective for small object detection scenarios, where testing has demonstrated the technology’s ability to maintain model accuracy above 96% while reducing the time that human operators spend grading by as much as 97%.
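The company does not describe its algorithm in detail, so the following is only a generic sketch of the underlying idea: let a model handle images it is confident about and route the rest to a human grader. It uses simple confidence thresholds, not the company's cyclic optimization method. The `Prediction` class, the `triage` function, the threshold values, and the sample scores are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    image_id: str
    fail_probability: float  # model's estimated probability that the image is a "fail"


def triage(predictions, low=0.05, high=0.95):
    """Split predictions into auto-pass, auto-fail, and human-review queues.

    Images scored at or below `low` are auto-passed, images scored at or
    above `high` are auto-failed, and everything in between is treated as
    ambiguous and sent to a human grader.
    """
    auto_pass, auto_fail, needs_review = [], [], []
    for p in predictions:
        if p.fail_probability <= low:
            auto_pass.append(p.image_id)
        elif p.fail_probability >= high:
            auto_fail.append(p.image_id)
        else:
            needs_review.append(p.image_id)
    return auto_pass, auto_fail, needs_review


# Made-up scores for illustration only.
batch = [
    Prediction("img_001", 0.01),
    Prediction("img_002", 0.50),
    Prediction("img_003", 0.99),
    Prediction("img_004", 0.03),
]
auto_pass, auto_fail, needs_review = triage(batch)
review_fraction = len(needs_review) / len(batch)
print(f"human review needed for {review_fraction:.0%} of images: {needs_review}")
```

In a scheme like this, the thresholds set the trade-off: tighter thresholds send more images to human graders, while looser ones save grading time at the risk of more automatic mistakes.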

The platform is said to be technology-agnostic and to integrate with virtually any machine vision or process control system, enabling enterprise-level integration through programmable logic controller (PLC) communication for real-time status and results exchange. The platform accommodates client-defined tolerances to address inspection applications across a broad range of manufacturing sectors, such as medical device production, food processing, semiconductor fabrication, and pharmaceutical production.

Driven by more stringent regulatory requirements and demand for product traceability, quality assurance operations are showing more interest in AI-based machine vision inspection systems, the company said. The platform also generates comprehensive documentation appropriate for regulated industries that require detailed compliance records.

The company developed the original deep learning-based system in 2018 for a customer who needed to rapidly deploy a foreign matter inspection solution. The six-month development timeline, achieved before deep learning became widespread in industrial applications, demonstrated the company’s capability to deliver advanced solutions quickly.

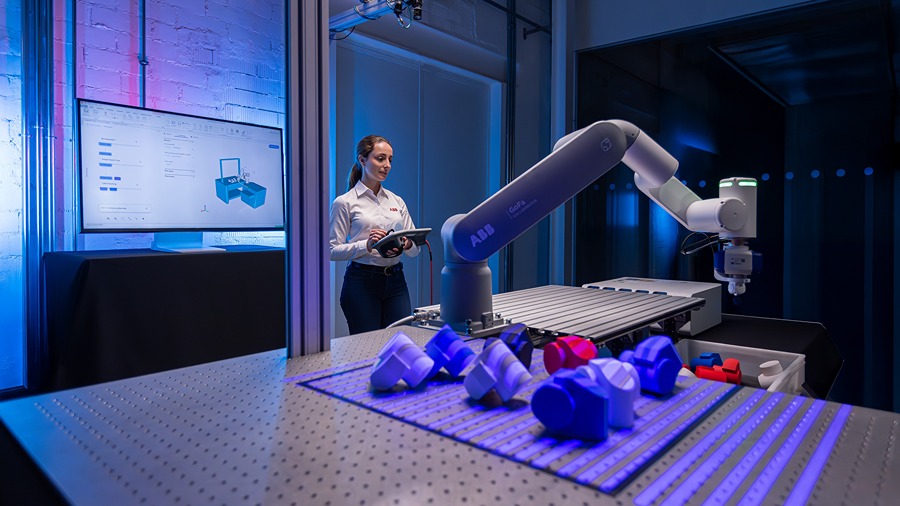

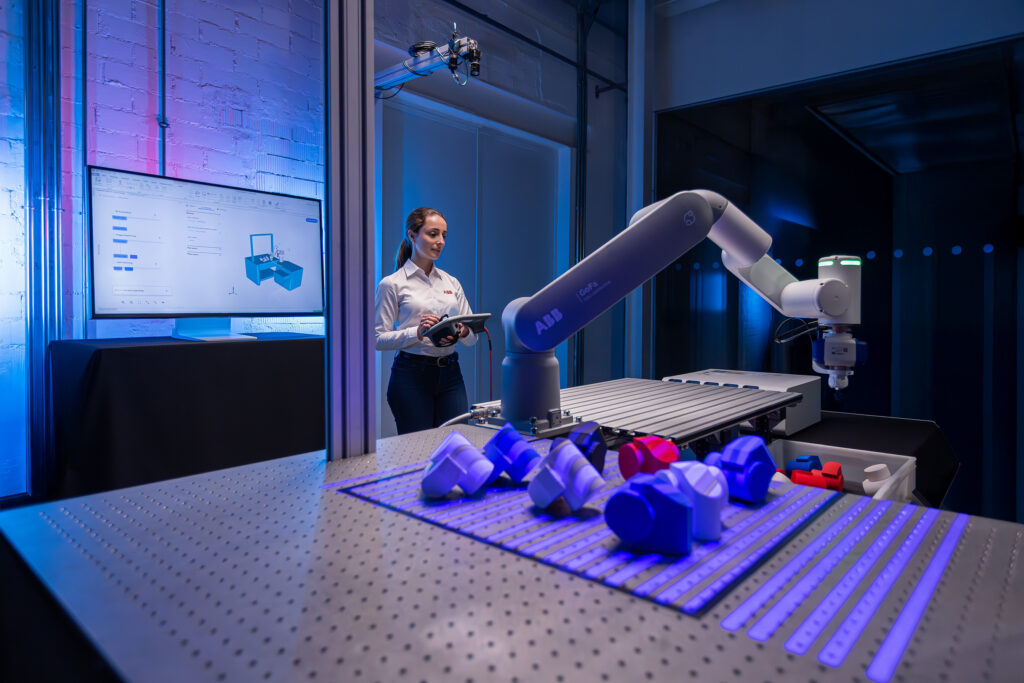

Faster robotic adjustments for machine vision

An intelligent, hardware-agnostic vision system gives robots the power to see and adapt in real time with advanced perception skills, through any camera or sensor, in complex applications.

Designed for users of all skill levels, the new software offers a simple web interface with drag-and-drop sensors or cameras and provides fast image acquisition and recognition. The ABB Robotics OmniCore EyeMotion vision system (Figure 2) is integrated in ABB’s software suite, complementing the ABB Robotics RobotStudio. The tools enable rapid setup and deployment and reduce commissioning time by up to 90% compared to custom solutions.

One step enables any robot to see and understand its environment, advancing autonomy and versatility for many 2D and 3D vision-based applications, the company said.

More compact, energy efficient machine vision cameras

A machine vision camera is said to be the smallest, lowest-power 10GigE camera in the world. The Emergent Vision Technologies Eros 10GigE camera series (Figure 3) includes “Thirty-nine new cameras across color, mono, SWIR, polarized, and UV sensors,” said John Ilett, president and founder of Emergent, at a “price more commonly seen with 2.5 or 5GigE cameras. This opens the door to many applications that were previously limited by not only GigE Vision camera performance, but cost, heat generation, or space requirements.”

Machine vision applications across several industries, such as sports broadcasting, manufacturing inspection, volumetric capture/virtual reality, and AI-powered computer vision and deep learning, call for multiple cameras generating high-speed, high-resolution imagery in real time. Such systems can be built on Microsoft Windows or Linux using company software that uses Nvidia GPUDirect to send the data directly to GPU memory, which the company says avoids intermediate copies and added latency. In multi-camera setups, this allows computationally intensive processes such as real-time H.264/H.265 compression to be mapped directly to the GPU efficiently and with low loss.

Mark T. Hoske is editor-in-chief, Control Engineering, WTWH Media.

Keywords

Machine vision, AI-enabled machine vision, robotic machine vision, small machine-vision cameras

Consider this

Are you applying the latest technologies to your industrial machine-vision applications?

You also might like

https://www.controleng.com/mechatronics/vision-and-discrete-sensors