General Motors and three automation providers explained how integrated technologies are achieving robotic paint repairs on a moving high-volume automotive paint line, at Automate 2025.

- The Automate 2025 application session, “The final frontier of automation in a high-volume automotive paint shop: Robotic paint repair on a moving line,” discussed the integration of robotics, motion control, machine vision and abrasive technologies.

- Experts from General Motors, 3M Abrasives Division, Encore / Inovision Inc. and Fanuc America explained the automation and integration involved in the automated paint repair application.

- Additional questions and answers on robotic-machine vision integration followed the formal presentation, as explained in this Control Engineering article.

General Motors and three automation providers, 3M, Encore / Inovision Inc. and Fanuc America, explained how integrated technologies are achieving robotic paint repairs on a moving high-volume automotive paint line at Automate 2025, held by the Association for Advancing Automation (A3). The application, called the first of its kind, was described at the conference in Detroit, May 12-16. The related show had more than 875 exhibitors, more than 40,000 registrants, and more than 140 conference sessions on robotics, machine vision, artificial intelligence and other industrial automation topics.

The application session, “The final frontier of automation in a high-volume automotive paint shop: Robotic paint repair on a moving line,” discussed the integration of robotics, motion control, machine vision and abrasive technologies, as described by:

- Ryan Odegaard, global director paint, General Motors

- Marcus Pelletier, global director R&D, 3M Co.

- Tom VanderPlas, senior staff engineer, paint, Fanuc America

- Gary Gagne, product development manager, Encore / Inovision Inc.

Michelle Frumkin, senior global robotics portfolio manager, 3M Co. Abrasive Systems Division, coordinated audience questions and answers after the presentation (Figure 1).

Application needs: Paint repairs on a moving automotive line

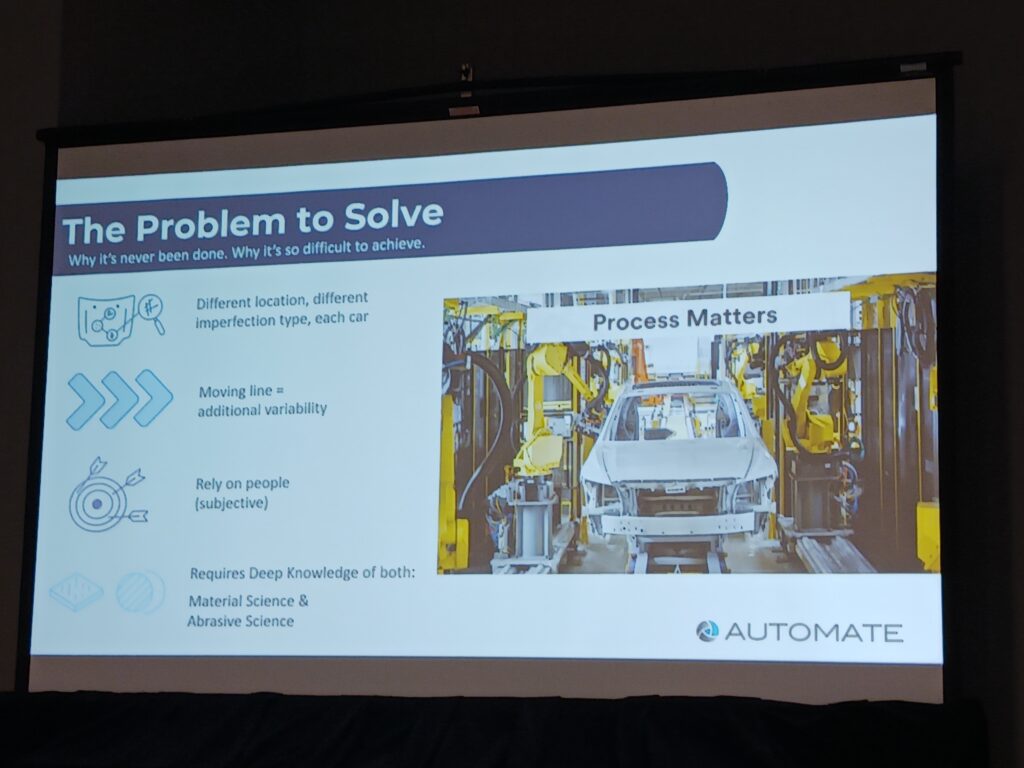

Odegaard said the finishing system for Cadillacs may create certain imperfections, and catching all of them requires understanding the inspection process (Figure 2). Visual classification of paint quality by humans is subjective, but data is needed to prevent future imperfections. The process matters. Repairing paint on a high-volume moving automotive paint line is revolutionary, he said.

Pelletier at 3M said the effort combined external and internal processes to develop this solution for General Motors. Sanding is variable because of wear and movement, which can induce problems. Today it is ergonomically challenging, and human performance decreases through the day, even for the most expert workers. Automation leads to consistent, higher-quality repairs and improves efficiency for clearcoat, primer and e-coat applications.

Four things make the application work: material and abrasive science, robotics, integration and vision, and partnership among all project participants.

“At 3M, we don’t have a moving automotive line to use, so we couldn’t develop this in isolation,” Pelletier said.

Among 3M contributions to the effort were software that applies recipes in real time, tooling for pressure, force and speed for each recipe, along with internal vision technologies.

The software measures results and validates and corrects assumptions ahead of time. It’s low-code or no-code software. The robot moves and bends as needed in real time, with the robot controller receiving an instruction every 10 milliseconds for precise control. Tooling sensors provide feedback to modify trajectories as needed, Pelletier said. It’s a multivariable process for appropriate speed and motion control.
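The sensor-feedback idea can be illustrated with a toy control loop. This is only a sketch under stated assumptions, not 3M's software: the proportional gain, the step limit, the spring-like contact model and every function name here are hypothetical. Only the 10-millisecond update period comes from the presentation.

```python
CYCLE_S = 0.010  # 10 ms update period, as stated in the presentation


def next_offset_mm(offset_mm, measured_force_n, target_force_n,
                   gain_mm_per_n=0.02, max_step_mm=0.05):
    """One control tick: nudge the tool depth toward the target contact force.

    The gain and step limit are illustrative assumptions, not real settings.
    """
    error_n = target_force_n - measured_force_n
    # Clamp the correction so a single tick never moves the tool too far.
    step_mm = max(-max_step_mm, min(max_step_mm, gain_mm_per_n * error_n))
    return offset_mm + step_mm


def simulate(target_force_n=10.0, stiffness_n_per_mm=20.0, ticks=200):
    """Toy plant (assumption): contact force grows linearly with tool depth."""
    offset_mm = 0.0
    force_history = []
    for _ in range(ticks):
        force_n = max(0.0, stiffness_n_per_mm * offset_mm)
        force_history.append(force_n)
        offset_mm = next_offset_mm(offset_mm, force_n, target_force_n)
    return force_history


if __name__ == "__main__":
    history = simulate()
    print(f"simulated {len(history) * CYCLE_S:.1f} s, "
          f"final force {history[-1]:.3f} N")
```

In this toy model the force settles on the target because each tick removes a fixed fraction of the remaining error. A production system would work with several variables at once (pressure, force and speed per recipe), as Pelletier described.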

Robotic advancements for paint repair

VanderPlas at Fanuc brought improved robotic technologies to the application. A modular rail system moves a 6-axis robot to keep the robot synchronized with the moving automobile, at up to 1,500 mm/sec for a 1,200 kg robot.

Advanced tracking offers precision. Painting experience is applied to sanding as well. An encoder connected to the conveyor supplies 500 pulses per inch to controllers and programmable logic controllers (PLCs), providing line tracking between the robot and the conveyor.
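At 500 pulses per inch, each encoder pulse represents 25.4 / 500 = 0.0508 mm of conveyor travel. The short sketch below shows that conversion and a simple speed estimate from two pulse counts. The function names and the sampling interval are assumptions for illustration, not part of any Fanuc or PLC interface.

```python
MM_PER_INCH = 25.4
PULSES_PER_INCH = 500  # encoder resolution stated in the presentation


def conveyor_travel_mm(pulse_count):
    """Convert an encoder pulse count to conveyor travel in millimeters."""
    return pulse_count * MM_PER_INCH / PULSES_PER_INCH


def conveyor_speed_mm_s(pulses_now, pulses_prev, dt_s):
    """Estimate conveyor speed from two pulse counts taken dt_s seconds apart."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    return conveyor_travel_mm(pulses_now - pulses_prev) / dt_s


if __name__ == "__main__":
    print(f"travel per pulse: {conveyor_travel_mm(1):.4f} mm")
    # Hypothetical sample: 100 pulses counted over 0.1 s.
    print(f"estimated speed: {conveyor_speed_mm_s(1100, 1000, 0.1):.1f} mm/s")
```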

Streamed motion provides robot control via an external controller (from 3M in this case for 6 axes in the application; Fanuc handles the seventh).

Infrared (IR) calibration is used because there are no pre-defined paths; topography varies by imperfections. Each position has to be reachable, using a feed that’s attainable. Rail tracking follows the vehicle down the line. When the last position in a recipe has run, the robot waits for the next imperfection.
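Rail tracking of this kind can be pictured as a target-following step that respects the rail's speed and travel limits. The sketch below is hypothetical: the 1,500 mm/sec limit is from the presentation, while the 10-millisecond update period for the rail axis, the standoff offset, the travel limits and the function name are all assumptions.

```python
MAX_RAIL_SPEED_MM_S = 1500.0  # rail speed limit stated in the presentation
CYCLE_S = 0.010               # assumption: rail target refreshed every 10 ms


def rail_step(rail_mm, vehicle_mm, standoff_mm, rail_min_mm, rail_max_mm):
    """Move the rail carriage one cycle toward the tracked vehicle position.

    The target is the vehicle position plus a fixed standoff, clamped to the
    rail's travel limits; the move per cycle is clamped to the speed limit.
    """
    target_mm = min(rail_max_mm, max(rail_min_mm, vehicle_mm + standoff_mm))
    max_step_mm = MAX_RAIL_SPEED_MM_S * CYCLE_S
    delta_mm = max(-max_step_mm, min(max_step_mm, target_mm - rail_mm))
    return rail_mm + delta_mm


if __name__ == "__main__":
    rail = 0.0
    # Hypothetical vehicle positions sampled once per cycle.
    for vehicle in (100.0, 102.0, 104.0):
        rail = rail_step(rail, vehicle, 0.0, 0.0, 5000.0)
        print(f"vehicle {vehicle:.0f} mm -> rail {rail:.1f} mm")
```

A repair position that would push the target beyond the rail's travel limits, or that needs a faster move than the limit allows, is not attainable at that moment, which is one way to read the reachability requirement above.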

Robot guidance simulation includes cycle time, reach, paint and handling applications. It’s used in the quoting phase of the project to validate the number of robots and their optimal position. Simulation tests scenarios for optimal positioning and robot choice. An inspection simulator is connected to the robot simulator to validate and tweak robot position.

Dual check safety (DCS) provides ISO- and IEC-certified safety functions that monitor servo motor position and will shut down the process if needed. Self-diagnosis is performed periodically. Safety I/O can turn regions of the workcell on and off, for example when the robot is in another area for restocking pads.

Elements of vision system application

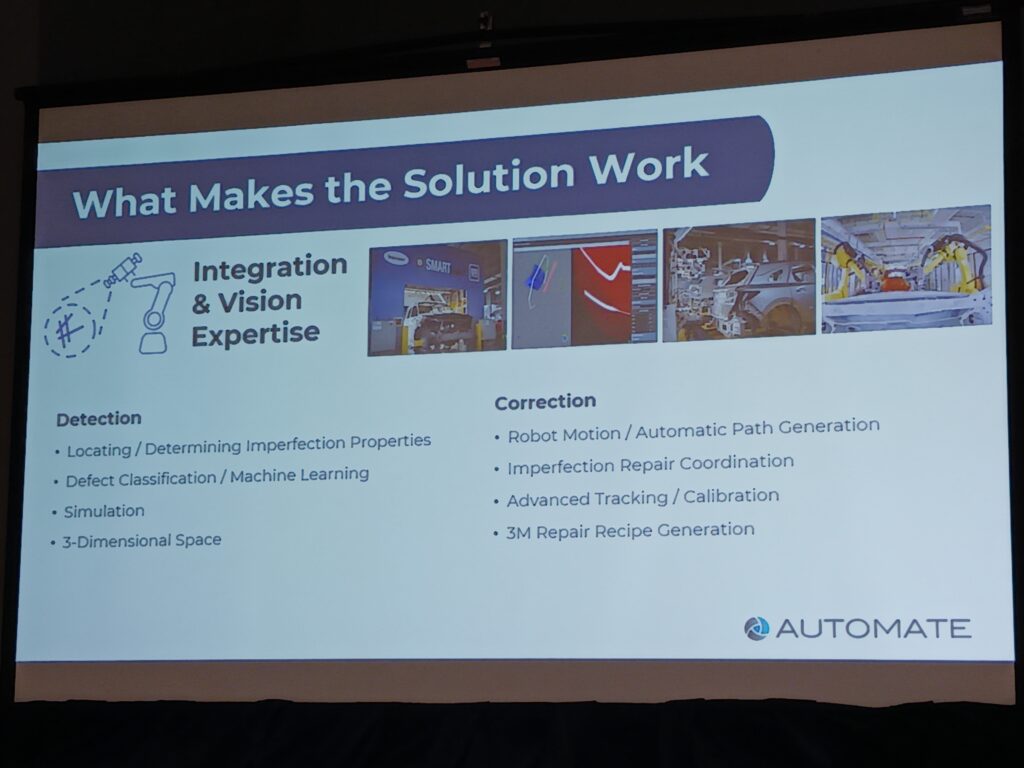

Gagne at Encore / Inovision contributed integration and vision expertise for detection and correction, locating imperfections and determining their properties. Defect classification and machine learning work with a large database of defects and properties, processing 10 GB of images in 60 seconds. Simulation builds models in 3D space. Vision provides correction-robot motion and automatic path generation, imperfection repair coordination, advanced tracking calibration and 3M’s repair recipe generation.

Each vehicle has an infinite number of possible repair locations, sizes and types. All repair path generation is on the fly for each vehicle. The vision system assigns how tools address defects and coordinates movement efficiently (Figure 3). The system applies input from encoders, vision and software tools to calibrate and use 3M’s repair recipe generation.
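How defects might be divided among repair robots can be sketched with a simple greedy rule. The presenters did not describe the actual scheduling method, so this is only an illustration: the `Defect` fields, the robot names and the least-loaded heuristic are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Defect:
    defect_id: str   # hypothetical identifier from the inspection system
    x_mm: float      # position along the vehicle, in millimeters
    repair_s: float  # estimated repair time, in seconds


def assign_defects(defects, robot_names):
    """Greedy sketch: walk defects front to back, give each to the robot
    with the least accumulated repair time so far (ties go to the first)."""
    load_s = {name: 0.0 for name in robot_names}
    plan = {name: [] for name in robot_names}
    for defect in sorted(defects, key=lambda d: d.x_mm):
        robot = min(robot_names, key=lambda name: load_s[name])
        plan[robot].append(defect.defect_id)
        load_s[robot] += defect.repair_s
    return plan, load_s


if __name__ == "__main__":
    found = [
        Defect("C", 300.0, 4.0),
        Defect("A", 100.0, 5.0),
        Defect("B", 200.0, 3.0),
    ]
    plan, load_s = assign_defects(found, ["R1", "R2"])
    print(plan)
    print(load_s)
```

A real system would also have to account for reach, collision avoidance between robots and the time window in which each defect passes through the cell, none of which this sketch models.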

Questions, answers on robotic-machine vision integration

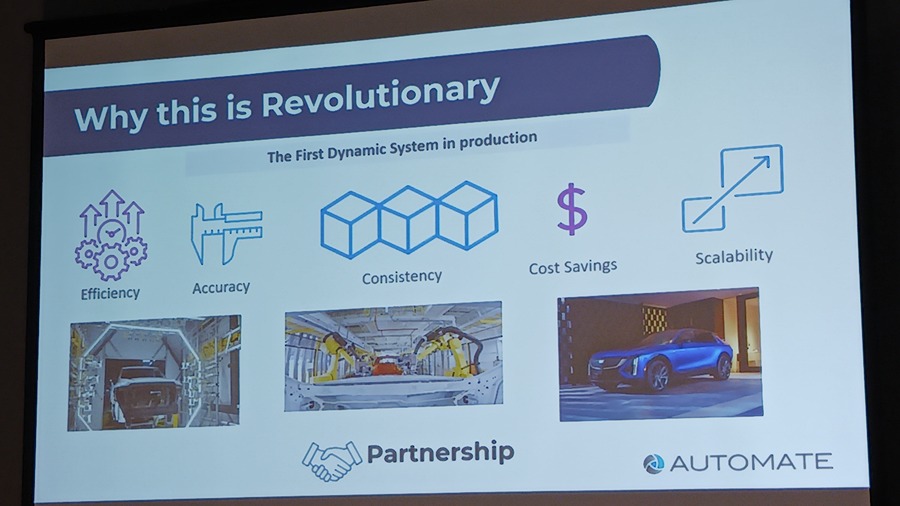

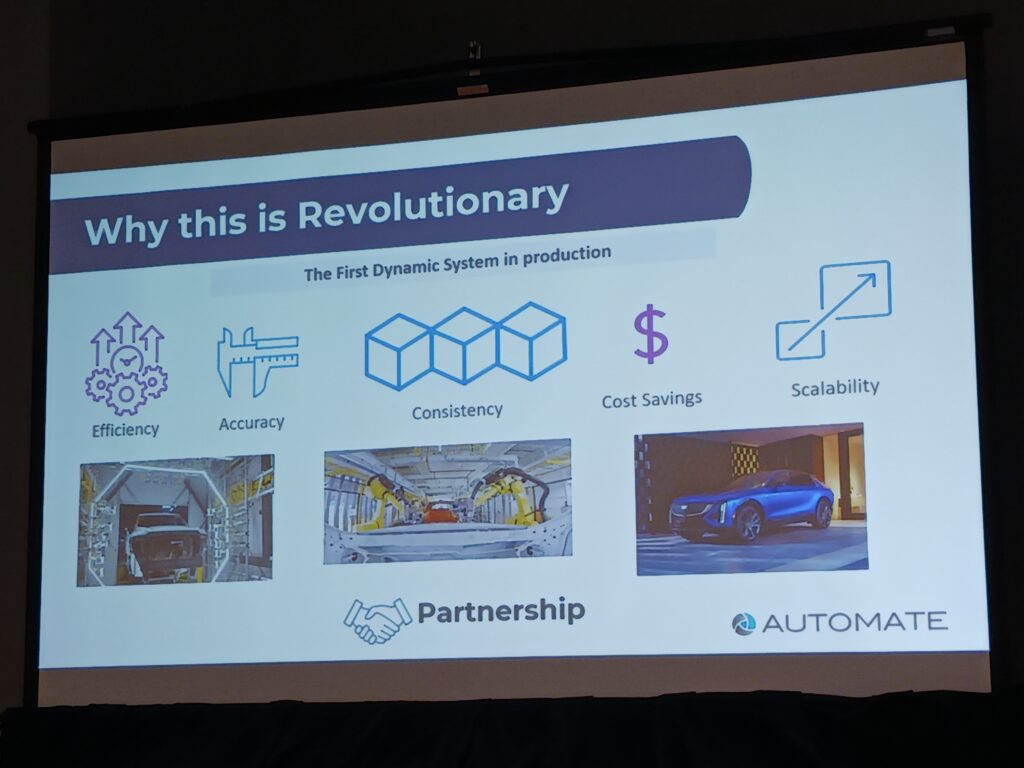

Odegaard explained why the application is revolutionary (Figure 4). It uses data sets to improve efficiency and accuracy, consistency, cost savings and scalability. It’s the first dynamic system being used in a production setting. Because paint shops have long lifecycles, the system was designed for scalability.

Odegaard said a key to project success has been multiple organizations partnering to overcome challenges.

VanderPlas said they set expectations from the start “that we don’t know we don’t know.” The team realized that in the beginning it was unclear how many spots would need repair on a vehicle or what paths the tools would take.

Gagne said the machine vision part of the application had been in development for four or five years, covering inspection machine learning, model training and defect databases to determine the size and type of defect, paint texture and other characteristics, giving a robot controller the knowledge to replace the human eye.

Odegaard said the application provided rich process data that could be used to improve future outcomes.

Gagne added that with human repairs, imperfections were simply wiped out. Now imperfections are logged as well as eliminated, improving quality and consistency in the systems delivered.

Cost savings and return on investment are unclear, Odegaard said, because the project is still iterating.

Further addressing the uniqueness of the application, Pelletier said other systems can identify imperfections but not where they are in real time, or can identify where but not what is needed for correction. This system integrates all of that, he said.

Mark T. Hoske is editor-in-chief, Control Engineering, WTWH Media, [email protected].

CONSIDER THIS

Are your complex motion control applications adequately integrating machine vision and robotics?

ONLINE

Find more coverage by searching “Automate 2025” at www.controleng.com.

Learn more at

https://www.controleng.com/mechatronics/vision-and-discrete-sensors