Complex augmented reality (AR) could soon find everyday use on assembly lines, in operating rooms and in the classroom as technology advances.

Machine vision technologies are making complex augmented reality (AR) systems possible on Earth. AR isn't just for video games and entertainment anymore. It could soon find everyday use on assembly lines, in operating rooms, and in the classroom.

Soon, NASA will be using smart glasses equipped with augmented reality video in space. But before that can happen, those systems are being tested in humankind’s other unexplored frontier, the bottom of the sea.

AR lets users add layers of digital information over a view of the physical world. It adds sounds, videos, and graphics to real-time video or overlays them onto transparent lenses.

Users experience an altered perception of reality, hence the term augmented reality. AR can be displayed on a wide range of devices, including screens, glasses, handheld devices, mobile phones, and head-mounted displays.
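For video-based AR (a phone screen, or a headset that shows a camera feed), layering digital content over live video comes down to per-pixel blending. The sketch below is a minimal illustration, not how any particular AR product does it. It assumes frames are small nested lists of 8-bit RGB tuples and that the digital layer is RGBA, with an alpha (opacity) channel; `composite_over` is a hypothetical helper name.

```python
def composite_over(frame, overlay):
    """Blend an RGBA overlay onto an RGB camera frame of the same size.

    Uses the standard "over" operator: out = alpha * overlay + (1 - alpha) * frame,
    where alpha is the overlay pixel's opacity scaled to [0, 1].
    """
    out = []
    for frame_row, overlay_row in zip(frame, overlay):
        row = []
        for (r, g, b), (o_r, o_g, o_b, a) in zip(frame_row, overlay_row):
            alpha = a / 255.0  # 0 = fully transparent, 1 = fully opaque
            row.append(tuple(
                round(alpha * o + (1 - alpha) * c)
                for o, c in zip((o_r, o_g, o_b), (r, g, b))
            ))
        out.append(row)
    return out


# A 1x3 gray camera frame with an opaque red, a transparent, and a half-opaque red overlay pixel.
frame = [[(100, 100, 100), (100, 100, 100), (100, 100, 100)]]
overlay = [[(255, 0, 0, 255), (0, 0, 0, 0), (255, 0, 0, 128)]]
print(composite_over(frame, overlay))  # [[(255, 0, 0), (100, 100, 100), (178, 50, 50)]]
```

Transparent-lens glasses work differently: the display adds light on top of the real scene the wearer already sees, so there is no camera frame to blend with, and dark or fully opaque virtual content is hard to render.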

How augmented reality works

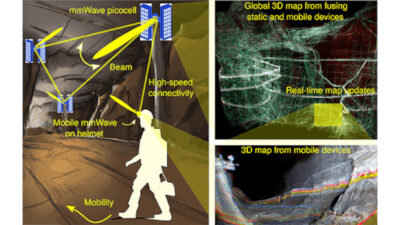

Simultaneous localization and mapping (SLAM) technology uses machine vision to build a map of the user's environment while tracking the device's position within that map. This allows 3-D objects to be recognized and AR elements to be anchored to locations on the map. Cameras and sensors collect data about the user's environment and interactions. Paired with CMOS sensors, global shutters, which expose every pixel at the same instant rather than row by row, can achieve very high frame rates while maintaining high resolution. All of this information is sent to a CPU and other processing hardware.
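Anchoring an AR element to the SLAM map means storing it in the map's (world) coordinates and, on every frame, re-expressing it relative to the camera using the pose SLAM has just estimated. The minimal sketch below assumes the pose is given as a world-to-camera rotation matrix `R` and translation `t`, so that p_cam = R·p_world + t, and builds `R` from a single rotation about the vertical axis for brevity. The function names are hypothetical; real SLAM systems estimate full 3-D orientation and also track uncertainty.

```python
import math


def yaw_rotation(yaw_rad):
    """3x3 rotation matrix about the vertical (y) axis."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def world_to_camera(point_world, R, t):
    """Express a map-anchored point in camera coordinates: p_cam = R * p_world + t."""
    return tuple(
        sum(R[i][j] * point_world[j] for j in range(3)) + t[i]
        for i in range(3)
    )


# A virtual label anchored 2 m in front of where the session started.
anchor = (0.0, 0.0, 2.0)

# Pose 1: camera at the map origin, looking along +z (identity pose).
print(world_to_camera(anchor, yaw_rotation(0.0), (0.0, 0.0, 0.0)))   # (0.0, 0.0, 2.0)

# Pose 2: the user has walked 1 m toward the label. With no rotation,
# the world-to-camera translation is the negated camera position.
print(world_to_camera(anchor, yaw_rotation(0.0), (0.0, 0.0, -1.0)))  # (0.0, 0.0, 1.0)
```

Because the anchor's map coordinates never change, the label stays put in the room while its camera-frame position (here, its distance) updates as the user moves.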

A miniature projector takes the processed sensor data and projects digital content onto a surface for viewing. Some AR devices use mirrors to keep the projected image properly aligned. Depth tracking calculates the distance between the camera and the objects in view so that virtual objects are placed correctly on the display.
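Once an anchored point is expressed in camera coordinates, placing it on the display is a projection step, and depth measurements decide whether real objects should hide it. The sketch below assumes an ideal pinhole camera with made-up intrinsics (focal lengths `FX`, `FY` and principal point `CX`, `CY` in pixels, for a hypothetical 640x480 camera). It ignores lens distortion and the extra camera-to-display-to-eye calibration that see-through glasses need; the helper names are hypothetical.

```python
def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (x, y, z), z pointing forward, to pixel (u, v)."""
    x, y, z = point_cam
    if z <= 0:
        return None  # at or behind the camera plane: nothing to draw
    return (fx * x / z + cx, fy * y / z + cy)


def virtual_pixel_visible(virtual_depth_m, measured_depth_m):
    """Depth test: draw the virtual pixel only if it is nearer than the real surface seen there."""
    return virtual_depth_m < measured_depth_m


# Hypothetical intrinsics for a 640x480 camera.
FX = FY = 500.0
CX, CY = 320.0, 240.0

label = (0.5, 0.0, 2.0)  # 0.5 m to the right, 2 m ahead
print(project_to_pixel(label, FX, FY, CX, CY))  # (445.0, 240.0)

# The depth sensor reports a wall 3 m away at that pixel: the label is drawn.
print(virtual_pixel_visible(label[2], 3.0))  # True
# A person steps in front at 1.2 m: the label is hidden behind them.
print(virtual_pixel_visible(label[2], 1.2))  # False
```

Doing the depth test per pixel is what lets a real object partially cover a virtual one instead of the virtual content always floating on top.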

Testing AR on the ocean floor

NASA's Johnson Space Center is using the Aquarius Reef Base underwater habitat off Key Largo, Florida, to try out a new high-resolution, see-through head-up display. Integrated into a Kirby Morgan-37 dive helmet, the display lets divers view sector sonar imagery, text messages, diagrams, photographs, and augmented reality video.

For divers, who often must work in zero-visibility conditions, it's like having sight restored to the blind. NASA astronauts and technical personnel conducted training missions to evaluate the potential for a similar system integrated into their extravehicular activity (EVA) spacesuits. For astronauts, the greatest benefits would be in situational awareness, safety, and effectiveness.

This article originally appeared in Vision Online. AIA is a part of the Association for Advancing Automation (A3), a CFE Media content partner. Edited by Chris Vavra, production editor, CFE Media.