Eelco van der Wal, managing director of PLCopen, the industrial control organization based in Gorinchem, Netherlands, is on a crusade, one that affects applications and, hence, the part of software reliability that control engineers are responsible for. His efforts, though, will provide only part of the solution.

Reliable control software requires a firm foundation, something only vendors can provide. With programs expanding from a few lines of code to thousands or more, van der Wal sees ballooning costs and rising risks. He also notes that changes, before or after an application is deployed on a controller, are almost guaranteed.

IEC 61131-3, an effort to standardize programming languages for industrial automation, provides definitions for a structured programming environment, including function blocks.

The key to reducing the costs and the risks, according to van der Wal, is structured programming via the interface specified in the global industrial control programming standard IEC 61131-3. While structured programming represents a change from the traditional approach, van der Wal says the payoff can be improved software reliability and more. When multiple projects are considered, the benefits are even greater, he says.

“If there’s a certain overlap between the first project and the second project, you will see a dramatic decrease in cost and time and risk factors, and an increase in [software] quality,” he says.

The cost savings vary, but van der Wal suggests 40% as a reasonable figure. Thus, more reliable software may also be less costly to produce.

A look at what control engineers can do to improve software reliability also reveals some of the tools that exist to make the job easier. In addition, discussions with controller manufacturers reveal how they’re responding to coding challenges by improving their own methods for ensuring software reliability.

Function block fundamentals

While advocating a standards-based, structured programming method, van der Wal notes that the benefits aren’t free and they do require a change in the traditional way of developing code. “You have to have a software development philosophy in place,” he says.

For control engineers, this means adopting a structured approach. Problems have to be decomposed into individual components that can then be handled by function blocks, each with defined inputs and known outputs.

Function blocks are designed using one of the graphical or textual languages in the IEC 61131-3 standard. Internally, they have defined variables and data types, such as integer, real, Boolean, or array. Once designed, a function block is simulated off-line. When it meets requirements, it is integrated with other function blocks into the final application, which is then deployed and maintained.
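To make the idea concrete, here is a minimal sketch written in Python rather than one of the IEC 61131-3 languages, purely for illustration. The block, `HighLimitAlarm`, is hypothetical: it has one input (a measured value), two fixed parameters, one Boolean output, and a piece of internal state that callers never touch directly. The `simulate` helper, also hypothetical, stands in for off-line simulation by replaying a recorded input sequence through the block.

```python
from dataclasses import dataclass, field


@dataclass
class HighLimitAlarm:
    """Hypothetical function block: alarm above a limit, with hysteresis."""
    limit: float       # parameter: alarm threshold
    hysteresis: float  # parameter: how far below the limit the value must fall to clear
    _active: bool = field(default=False, init=False)  # internal state, hidden from callers

    def __call__(self, value: float) -> bool:
        """One controller scan: read the input, update state, return the output."""
        if value > self.limit:
            self._active = True
        elif value < self.limit - self.hysteresis:
            self._active = False
        # Between (limit - hysteresis) and limit, the previous output is held.
        return self._active


def simulate(block, inputs):
    """Off-line simulation: feed a recorded input sequence, collect the outputs."""
    return [block(value) for value in inputs]


if __name__ == "__main__":
    alarm = HighLimitAlarm(limit=100.0, hysteresis=5.0)
    print(simulate(alarm, [90.0, 101.0, 98.0, 94.0, 99.0]))
```

Running it prints `[False, True, True, False, False]`. Because the output depends only on the inputs and the block’s own state, the same recorded sequence always yields the same result, which is what makes checking the block off-line, before integration, meaningful.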

It’s the function blocks that give rise to the increase in reliability and the decrease in cost, says van der Wal. The former happens because function blocks are relatively small and simple, so it’s possible to exercise them completely in tests and understand exactly what they’re doing. The cost reduction is a consequence of being able to reuse a finished function block in another project. Other savings come from being able to make changes more easily, and thus to maintain an application for less.

A structured programming approach does require more upfront work, particularly on the first project. It also means it’s not possible to dive right into a problem, no matter how tempting that is. The task of partitioning the control problem, however, is aided by tools such as the sequential function chart.

Model-based design tools

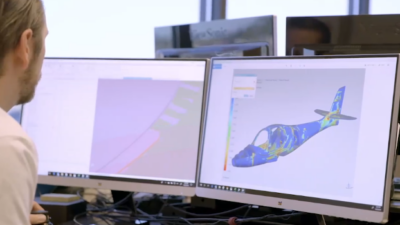

Mathworks, the privately held firm based in Natick, MA, has a different but conceptually similar approach to reliable control system software. Along with technical computing software, the company develops and supplies model-based design tools, which form the basis of its approach to control software. Its model-based approach to developing control systems, like structured programming, requires that the problem be defined and broken down into smaller steps. With that done, the company’s Simulink process modeling software can create function block analogs.

Paul Barnard, Mathworks’ marketing director for control design automation, explains that once a process is modeled, the software can be used for code development. “You can graphically design or describe an algorithm and through automatic code generation generate C code that then is compiled and runs usually on some type of embedded target.”

National Instruments’ LabVIEW takes a similar approach. The benefit of graphical development methods is that they abstract the control problem and let a single developer manage more functionality. Just as compilers take the human element out of producing machine instructions, automatic code generation takes it out of writing the source code. The resulting code is more uniform than hand-written code, and might be more reliable, although that’s not guaranteed.

Brett Murphy, Mathworks’ technical marketing manager for verification, validation and test, notes that Mathworks is aware that automatic code generation is not infallible and provides ways to test the generated solution and the models it springs from. “We have modeling checking standards, for example,” he says.

Other tools provide formal analysis of both model and code, determining whether it’s possible to reach a particular failure mode. Such tools allow checking at the component or model level, but at present they can’t be scaled up to large systems.
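A toy version of such an analysis is explicit-state exploration: enumerate every state a model can reach under every combination of inputs, and check whether any of those states is a failure. The sketch below assumes a hypothetical pump-and-valve interlock with a deliberate flaw; it is not how any particular commercial tool works. It also hints at the scaling limit: the number of possible states grows exponentially with the number of Boolean variables, which is one reason exhaustive checks tend to stay at the component level.

```python
from collections import deque
from itertools import product


# Hypothetical model: the state is (pump_on, valve_open). On each scan the
# operator may issue any combination of pump and valve requests.
def step(state, pump_req, valve_req):
    pump_on, valve_open = state
    if pump_req and valve_open:  # interlock: the pump starts only with the valve open
        pump_on = True
    elif not pump_req:
        pump_on = False
    valve_open = valve_req       # flaw: the valve may close while the pump runs
    return (pump_on, valve_open)


def step_fixed(state, pump_req, valve_req):
    """Same logic, but the valve is held open while the pump is running."""
    pump_on, valve_open = step(state, pump_req, valve_req)
    return (pump_on, valve_open or pump_on)


def is_failure(state):
    pump_on, valve_open = state
    return pump_on and not valve_open  # pump running against a closed valve


def find_failure(initial, step_fn, failure_fn):
    """Breadth-first search over all reachable states.

    Returns the shortest sequence of (pump_req, valve_req) inputs that reaches
    a failure state, or None if no failure state is reachable.
    """
    parent = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if failure_fn(state):
            trace = []
            while parent[state] is not None:
                state, inputs = parent[state]
                trace.append(inputs)
            return list(reversed(trace))
        for inputs in product([False, True], repeat=2):
            nxt = step_fn(state, *inputs)
            if nxt not in parent:
                parent[nxt] = (state, inputs)
                queue.append(nxt)
    return None


if __name__ == "__main__":
    print(find_failure((False, False), step, is_failure))
    print(find_failure((False, False), step_fixed, is_failure))
```

For the flawed logic the search returns `[(False, True), (True, False)]`: open the valve on one scan, then request the pump while closing the valve on the next. For the corrected logic it returns `None`, meaning no reachable state is a failure.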

No machine is an island

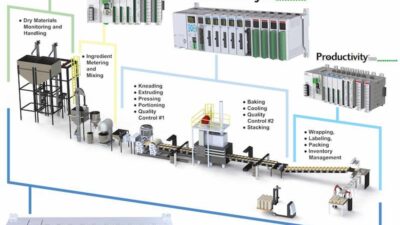

That lack of scalability is unfortunate because applications run on platforms and often on systems that are part of a larger network. The combination brings with it an entirely new set of issues that can affect software reliability in areas that are sometimes beyond a control engineer’s domain.

Roy Kok, product marketing manager for Proficy HMI/SCADA software at GE Fanuc Automation of Charlottesville, VA, notes that the platform operating system (in this case, Microsoft Windows, NT or Vista) needs to be up-to-date and, if possible, fully patched. But applying a patch before it’s had a chance to be seasoned in non-critical areas can adversely impact software reliability, he says. So vendors like GE Fanuc have to supply the expertise to know when the patch, like the porridge in the fairy tale, is just right.

Likewise, other software and components can hurt an automation application, even if there’s no problem initially. “Updates of ancillary software can have a direct impact on the operation and reliability of the primary solution,” says Kok.

While software reliability can be improved by removing non-critical or untested extras, that’s not enough, Kok continues. What’s needed is a diagnostic that can spot changes in third-party components and elsewhere.

For example, a production line may be down and it may be possible to bring it back up if a piece of code referencing a limit switch is bypassed. There’s a tremendous incentive to make the change immediately, bypass the switch, and fix the problem software later. Kok says it’s important that the temporary fix isn’t allowed to slip in unnoticed, become permanent, and cause a failure some time afterward. GE Fanuc’s change management system can do periodic and automatic scans of controller software, compare the results to a backup file, recognize the change, and thereby document it.
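The scan-and-compare idea can be sketched in a few lines. This is not GE Fanuc’s implementation; it assumes, hypothetically, that the controller program can be read back as a dictionary mapping block names to their source text, and it uses SHA-256 digests from Python’s standard `hashlib` module to spot differences from the backup. The block names and program text are made up for illustration.

```python
import hashlib
from datetime import datetime, timezone


def fingerprint(program):
    """Map each program block name to a SHA-256 digest of its text."""
    return {name: hashlib.sha256(text.encode("utf-8")).hexdigest()
            for name, text in program.items()}


def compare_to_backup(current, backup):
    """Report blocks added, removed, or modified relative to the backup copy."""
    cur, ref = fingerprint(current), fingerprint(backup)
    return {
        "added": sorted(cur.keys() - ref.keys()),
        "removed": sorted(ref.keys() - cur.keys()),
        "modified": sorted(n for n in cur.keys() & ref.keys() if cur[n] != ref[n]),
    }


def log_changes(changes, log):
    """Append a timestamped entry for every detected change so none slips by."""
    stamp = datetime.now(timezone.utc).isoformat()
    for kind, names in changes.items():
        for name in names:
            log.append(f"{stamp} {kind}: {name}")
    return log


if __name__ == "__main__":
    # Hypothetical program text; the limit-switch check has been bypassed.
    backup = {"Main": "CALL LineControl;",
              "LineControl": "IF LS1 THEN StopConveyor(); END_IF;"}
    current = {"Main": "CALL LineControl;",
               "LineControl": "(* LS1 bypassed *) ;"}
    for entry in log_changes(compare_to_backup(current, backup), []):
        print(entry)
```

Run periodically, a check like this turns the quick limit-switch bypass into a dated log entry rather than a silent, permanent change.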

Improving software reliability requires changes from programmers and software suppliers, but the payoff could be greater reliability, higher quality, and lower cost.

Trust, but verify

…known good configurations. “This allows the system to detect if an untested version of a component, including a third-party component, has been installed,” says Harding.
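A minimal sketch of a known-good-configuration check follows, assuming the installed components and their versions can be listed as name/version pairs. The component names, version strings, and the `KNOWN_GOOD` table are all hypothetical; a real system would also need a trustworthy way to read the installed versions.

```python
# Hypothetical known-good configuration: each component maps to the set of
# versions that have been validated together.
KNOWN_GOOD = {
    "hmi_runtime": {"4.1.2"},
    "opc_server": {"2.0.7", "2.0.9"},
    "historian_client": {"1.3.0"},
}


def untested_components(installed, known_good=KNOWN_GOOD):
    """Return (name, version) pairs not covered by the known-good configuration.

    A component missing from known_good entirely (for example, a newly added
    third-party package) is reported as well.
    """
    return sorted(
        (name, version)
        for name, version in installed.items()
        if version not in known_good.get(name, set())
    )


if __name__ == "__main__":
    installed = {
        "hmi_runtime": "4.1.2",
        "opc_server": "2.0.8",      # not a validated version
        "historian_client": "1.3.0",
        "report_addin": "0.9",      # unknown third-party component
    }
    print(untested_components(installed))
```

Here the check flags `('opc_server', '2.0.8')` and `('report_addin', '0.9')`. Allowing a set of versions per component lets more than one validated combination count as known good.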

Eric Kaczor, product marketing for engineering software products at Siemens Engineering and Automation, notes that end users are confronted by many different pieces of software. That situation makes version control and version compatibility difficult to achieve and manage. One technique used to reduce the problem freezes time, at least as far as software is concerned. For example, Siemens will offer a single “golden DVD” containing the software users need for its equipment. Kaczor says the software in such a setup will lag the most current versions somewhat, but the combination offers something the most recent software might not be able to match: “It’s all guaranteed to work together.”

Kaczor says that Siemens, for its part, tries to ensure that software as shipped is compatible with any combination of released hardware. The company does this through extensive regression testing, trying each prospective software release on rooms full of equipment. Such a check is thorough but not 100% exhaustive, because it’s difficult, perhaps impossible, to verify against all third-party software.
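The combinatorial shape of that kind of regression testing can be sketched as follows. The hardware catalog and the test callback are hypothetical stand-ins for a real test rack and test suite. The point is only that every combination of released modules is tried, and that the number of runs is the product of the catalog sizes (here 2 × 3 × 2 = 12), which is why adding third-party software as yet another dimension quickly makes exhaustive coverage impractical.

```python
from itertools import product

# Hypothetical catalog of released hardware, one list of options per slot type.
CATALOG = [
    ["cpu_a", "cpu_b"],              # controllers
    ["eth_1", "eth_2", "serial_1"],  # communication modules
    ["di_16", "do_16"],              # I/O modules
]


def run_regression(release, test_fn, catalog=CATALOG):
    """Run test_fn(release, config) on every hardware combination.

    Returns the number of configurations tried and the list of failing ones.
    """
    tried, failures = 0, []
    for config in product(*catalog):
        tried += 1
        if not test_fn(release, config):
            failures.append(config)
    return tried, failures


if __name__ == "__main__":
    def fake_test(release, config):
        # Pretend this release fails only with cpu_b plus the serial module.
        cpu, comm, _io = config
        return not (cpu == "cpu_b" and comm == "serial_1")

    print(run_regression("v2.0", fake_test))
```

With the fake test, 12 configurations are tried and the two containing both `cpu_b` and `serial_1` are reported as failures.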

Opto 22 is a Temecula, CA-based maker of I/O hardware, controllers, and software. Like others, the company does regression testing before releasing software to customers. Roger Herrscher, senior engineer in the Opto 22 quality assurance group, says the company recently reorganized its QA group to better test its software against the range of possible hardware. Previously, the QA team was composed solely of engineers dedicated to product testing. Now Opto 22 has brought software and hardware designers into the group. The change increases detailed product knowledge, improves testing, and ultimately improves quality.

Such efforts come with a caveat where software is concerned, because of its nature: “When using software, there’s almost always more than one way to accomplish a given task,” notes Herrscher.

A natural tendency, he says, is for the designer of software to have a preferred way of doing things. Thus, the designer might not consider and exercise all the avenues a user might follow. Having someone else design the test function circumvents that problem, Herrscher says.

Like other suppliers, Schneider Electric of Rueil-Malmaison, France, does regression testing with every release of its Unity Pro software package. In particular, Rich Hutton, automation product manager for Schneider Electric, notes that the communications layer that handles traffic is key to reliability and consistent operation. He adds that diagnostics can catch memory failures and other problems, but adding them takes resources. Taken to an extreme, diagnostics can actually impair the reliability of the main application, he says.

While Schneider Electric works to make its software reliable, the company is also taking steps to help ensure that the programming end users do to create a desired application is reliable. Hutton notes that the company’s development tool supports programming in five IEC 61131-3 languages. This is done in part because it helps Schneider Electric’s customers do their part to ensure software reliability.

A choice of standard programming languages “improves the reliability of the designers, because they can utilize a standard programming style,” says Hutton. “That makes their code writing usually more reliable.”

Author Information

Hank Hogan is a contributing editor for Control Engineering.

‘We don’t want to test in quality; we want to build quality in.’

Controlling software quality in the age of open systems and rapid change is a challenge for all developers. On the last day of the Honeywell User Group meeting in Phoenix in June 2007, user questions turned to software upgrades and patch management. Honeywell vice president of technology DonnaLee Skagg addressed the crowd and talked about continuous improvement.

“When you’re talking about software quality, you’re talking about three things. The first thing you want to talk about is preventing defects from happening in the first place,” she said. When you’re developing any software program, there are risky elements and there are other elements that are not so risky, Skagg explained. “We want to identify and understand the riskiest elements and test those out.”

“Second,” said Skagg, “if there are defects, you don’t want them going out. You want to find them and patch them internally, before the software goes out to the customer.”

Third, said Skagg, “you want to look at any defects you do find and not just fix them. You want to see what’s causing them and do root cause analysis to make sure they don’t happen again elsewhere.”

Skagg said Honeywell is moving to an iterative software development strategy that will be deployed over the next year and a half. Iterative development means software functions are developed, evaluated, and tested more rapidly and earlier in the life cycle of a new release. Customers are brought in sooner to review the functionality, and testing is done earlier so the results can be fed back into the other functions being developed.

“Ultimately, we don’t want to test in quality; we want to build quality in from the start. An iterative process allows us to do that,” Skagg said.

To build trustworthy software, scrutinize the operating system

While control engineers and vendors struggle with growing software complexity, and therefore decreasing reliability, there are signs that the basis for everything, the operating system, is being increasingly scrutinized.

The second Tuesday of every month now comes with security patches from Microsoft, with some applying to core components in the operating system. Not installing patches is less and less of an option, due to increasing connectivity and concerns about cyber security. Vendors must therefore do regression testing of patches in a timely manner.

Stephen Briant, product manager for the FactoryTalk services platform from Rockwell Automation, says that Microsoft doesn’t make its core living in industrial automation. Thus, he says, Microsoft’s software requirements aren’t necessarily aligned with those of control system users. Using a non-Microsoft operating system, he says, could improve overall software reliability, but the trade-offs have to be carefully considered.

Briant also notes the high overall reliability of today’s controller software and predicts it might have a far-reaching impact: “The market is going to trend toward the need for everything else to operate at the same level of integrity as that control solution,” he says.