AT A GLANCE

Component analysis

Error prediction

Multivariable controls

Application studies

Consistency improvement

Sidebars: Principal component analysis

For many years, chemical and process industries have successfully employed statistical process control (SPC) as a tool for monitoring and maintaining the consistency and operation of process systems. Traditional SPC approaches involve plotting trends of important quality parameters and ensuring that these trends do not violate pre-specified control limits. Despite the success of this approach, several limitations affect its ability to provide accurate monitoring of batch processes.

For example, SPC typically records many variables, each on its own chart, and interpreting that many charts together is difficult. In addition, because batch operation rarely reaches steady state, deviations may be caused by interactions between variables, and these interactions may not appear on SPC charts that treat each variable as an independent measurement.

Recent application studies have indicated that multivariate statistical technology can help maintain consistent operation in complex batch processes. Multivariate statistics relies heavily upon the statistical routines referred to as principal component analysis (PCA) and partial least squares (PLS). PCA is a technique designed to extract major features within a data set. In this way, PCA can identify several statistics that provide important information regarding process plant operation. (See the “Principal component analysis” sidebar.)

The statistic that tends to be of most use when monitoring a process plant using PCA is termed SPE (square prediction error). SPE provides a measure of how the relationships between the process variables compare with those identified under normal operating conditions.

As a simple example, consider a fermentation process in which the pH in the vessel is controlled by manipulating the acid feedrate. PCA would identify that the pH is related to the acid flowrate. If a fault developed on the pH sensor at a later date, the relationship between the pH measurement and acid flow would change. This change would result in an elevated SPE value. The SPE value should be small under normal conditions, and its rise would indicate that the process measurements are behaving abnormally.

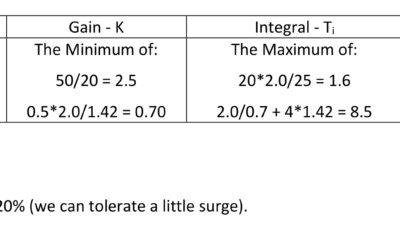

In this square prediction error chart, values above 95% warn that the condition of the process is abnormal; a value of 99% or above represents an action limit indicating that the process is behaving abnormally and that steps should be taken to address the problem.

The second multivariate approach, PLS, is a regression tool that identifies cause-and-effect relationships in process systems that contain many highly correlated variables. Such relationships can be difficult to identify using more traditional regression tools, such as multiple linear regression (MLR). PLS, however, is able to extract the relationships in process data that can be exploited to estimate difficult-to-measure quality variables.
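As a rough illustration of how PLS handles many highly correlated variables, the sketch below implements the standard single-response NIPALS algorithm (PLS1) with NumPy. The data are synthetic: ten measurements driven by one hidden factor, which also drives the quality variable. The function names `fit_pls1` and `predict_pls1`, the noise levels and the single-component choice are assumptions made for this example, not details from the studies described here.

```python
import numpy as np

def fit_pls1(X, y, n_components):
    """Fit a single-response PLS model with the NIPALS algorithm."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xk, yk = X - x_mean, y - y_mean          # work on mean-centered data
    W, P, q = [], [], []
    for _ in range(n_components):
        w = Xk.T @ yk                        # direction of maximum covariance with y
        w = w / np.linalg.norm(w)
        t = Xk @ w                           # score vector for this component
        tt = t @ t
        p = Xk.T @ t / tt                    # X loading
        q_a = (yk @ t) / tt                  # y loading
        Xk = Xk - np.outer(t, p)             # deflate X and y
        yk = yk - q_a * t
        W.append(w); P.append(p); q.append(q_a)
    W, P, q = np.array(W).T, np.array(P).T, np.array(q)
    coef = W @ np.linalg.solve(P.T @ W, q)   # regression vector in original X space
    return x_mean, y_mean, coef

def predict_pls1(X, model):
    x_mean, y_mean, coef = model
    return (X - x_mean) @ coef + y_mean

# Synthetic data (assumption): ten correlated measurements, one hidden factor.
rng = np.random.default_rng(0)
latent = rng.normal(size=60)
X = latent[:, None] * np.linspace(0.5, 1.5, 10) + 0.05 * rng.normal(size=(60, 10))
y = 2.0 * latent + 0.1 * rng.normal(size=60)

model = fit_pls1(X[:40], y[:40], n_components=1)   # train on the first 40 rows
y_hat = predict_pls1(X[40:], model)                # estimate the held-out rows
rmse = float(np.sqrt(np.mean((y_hat - y[40:]) ** 2)))
print("hold-out RMSE:", rmse)
```

In a real application the number of components would normally be chosen by cross-validation rather than fixed in advance.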

Fermentation study

In this application, PCA is applied to monitor a fermentation process. Fermentation processes contain living organisms and, as a result, can be extremely unpredictable and very sensitive to process change. A pH sensor fault, for example, can quickly lead to problems. The fault may result in the pH being controlled to an inappropriate value that can result in the death of the organisms. By detecting process faults before significant process impact, corrective action can be taken to recover the batch.

The “SPE chart” graphic shows the SPE statistic calculated during a particular fermentation batch. The upper chart shows the absolute value of the SPE, and the lower chart expresses the same value as a statistical confidence level. On the lower chart, a value above 95% provides a warning that the condition of the process is abnormal, and a value of 99% or above represents an action limit indicating that the process is behaving abnormally and that steps should be taken to address the problem. In the chart, SPE values between 95% and 99% are yellow and values above 99% are red.
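The warning and action limits can be sketched in a few lines of Python. The article does not say how the limits are derived, so this example assumes they are taken as empirical percentiles of SPE values collected during normal operation; the function names and the reference data are hypothetical.

```python
def empirical_limit(reference_spe, percent):
    """Nearest-rank percentile of SPE values from normal operation (assumed method)."""
    ordered = sorted(reference_spe)
    rank = max(1, (percent * len(ordered) + 99) // 100)  # integer ceiling of percent * n / 100
    return ordered[rank - 1]

def classify_spe(spe, warning_limit, action_limit):
    """Map an SPE value to the chart colors described in the text."""
    if spe > action_limit:
        return "red"       # action limit exceeded
    if spe > warning_limit:
        return "yellow"    # warning band
    return "normal"

# Hypothetical reference set: 100 SPE values from normal batches.
reference_spe = list(range(1, 101))
warning_limit = empirical_limit(reference_spe, 95)
action_limit = empirical_limit(reference_spe, 99)

for value in (50, 97, 120):
    print(value, classify_spe(value, warning_limit, action_limit))
```

Commercial packages often use a distribution-based approximation for these limits instead; the percentile approach is shown only because it needs no further assumptions about the data.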

In this particular batch, the SPE statistic becomes red approximately one quarter of the way through the batch, thus indicating a potential problem in the process. Further scrutiny of this abnormal event is possible through analysis of a contribution chart, which indicates the contribution each process variable has made to the abnormality. Knowing this information can help identify instruments or processes that are the root cause.
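A contribution chart can be approximated as follows. This sketch assumes one common definition, in which each variable's contribution to SPE is its own squared residual between the measured value and the value reconstructed by the PCA model. The variable names and numbers are hypothetical and are not taken from the fermentation study.

```python
def spe_contributions(names, measured, reconstructed):
    """Squared residual per variable; the values sum to the SPE for this sample."""
    return {
        name: (x - x_hat) ** 2
        for name, x, x_hat in zip(names, measured, reconstructed)
    }

def rank_contributions(contributions):
    """Largest contributors first, as they would be read off a contribution chart."""
    return sorted(contributions.items(), key=lambda item: item[1], reverse=True)

# Hypothetical scaled measurements for one abnormal sample.
names = ["pH", "acid_flow", "temperature"]
measured = [2.0, 0.5, 0.0]
reconstructed = [0.5, 0.25, 0.0]

contributions = spe_contributions(names, measured, reconstructed)
ranked = rank_contributions(contributions)
spe_total = sum(contributions.values())
print(ranked, spe_total)
```

Here the pH residual dominates, which would point an engineer toward the pH sensor or its control loop first.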

PCA was applied to data collected from many more fermentation batches. Of the batches that resulted in low-yield production, PCA identified abnormal conditions in more than 25%. In contrast, traditional SPC techniques identified problems in only 7% of these batches. Had the information from the multivariable analysis been available during the low-yield batches, corrective action might have been possible and the yield increased.

Steel furnace study

A batch operated process forms part of SSAB Oxelösund’s steel-making plant. The process studied reduces the carbon levels in iron by blowing oxygen into a bath to form low-carbon steel. When the carbon levels have been reduced to a low level, the oxygen flow into the furnace has the undesirable effect of oxidizing the iron. The challenge for the process operator is to switch off the oxygen flow rate at the optimum time—the point at which the maximum amount of carbon has been removed from the iron without significant oxidization within the furnace.

In-process iron oxide content measurement is key to consistent steel production. The green line is the estimated iron oxide concentration per the model, and the magenta line is the actual iron oxide concentration.

No on-line quality measurements are available to help the operator make decisions because of the harsh environment within the furnace. Accurate timing of the oxygen shut-off would allow the carbon content target to be achieved more closely, reduce batch cycle time, and increase product consistency.

The objective for this study was to use the measurements of the raw materials entering the furnace and the on-line measurements recorded during the batch to provide an estimate of the iron oxide content in the slag. During the final stages of the batch, this value should increase to indicate that oxidization is occurring in the furnace, and oxygen flow should be stopped at this point.

Nearly 100 variables were measured in this process. Correlations among variables rendered traditional identification algorithms unsuitable. However, PLS was able to identify a model of this system to estimate the final iron oxide concentration in the slag. Since an iron oxide measurement was only made available at the end of each batch cycle, accuracy of the estimate provided by the PLS model improved as the batch neared the end of the cycle.

An example of the on-line estimate provided by this model is illustrated in the “On-line FeO content estimate” graphic. The green line in this figure is the iron oxide concentration estimated by the model, and the magenta line is the actual iron oxide concentration measured by the laboratory at the end of the batch.

Multivariate statistical models

Tracking penicillin yield in the fermentation vessel shows that the concentration of the product increases at a far greater rate when a predictive controller is used, as opposed to the process operating under open-loop control.

Advanced control systems, model predictive control (MPC) in particular, are applied with increasing frequency in the chemical and process industries and have been shown to improve process performance. Unfortunately, the difficulty in establishing accurate models of batch processes has prevented wide use of this technology for such systems. With multivariate statistical models, such as those identified in the studies above, it is possible to develop accurate models of batch processes and apply model predictive control to batch processes.

To illustrate the potential of this approach, consider its application to a simulation of a penicillin fermentation process. The control of penicillin formation in such systems is typically achieved in an open-loop manner by maintaining operating conditions at pre-specified trajectories. Such control, however, is unable to maximize product yield and cope with process disturbances adequately.

In contrast, model predictive control provides the mechanism to automatically manipulate variables within the fermentation vessel to improve plant yield and counter plant disturbances. The “Open loop vs. predictive control productivity comparison” graphic shows how a model predictive controller can improve the penicillin yield in the fermentation vessel: the concentration of the product within the vessel increases at a far greater rate when a predictive controller is used than when the process operates under open-loop control.
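The receding-horizon idea behind model predictive control can be shown with a toy example. The sketch below is not the penicillin simulation or the controller used in the study. It assumes a hypothetical first-order model, a small set of candidate inputs and a three-step horizon, and searches all input sequences by brute force. An unmodeled disturbance is applied partway through to compare the response with a fixed open-loop input.

```python
from itertools import product

A, B = 0.9, 0.1                        # hypothetical model: x_next = A*x + B*u
SETPOINT = 1.0
CANDIDATE_INPUTS = (0.0, 0.5, 1.0, 1.5, 2.0)
HORIZON = 3

def predicted_cost(x, inputs):
    """Sum of squared setpoint errors predicted by the model over the horizon."""
    cost = 0.0
    for u in inputs:
        x = A * x + B * u
        cost += (x - SETPOINT) ** 2
    return cost

def mpc_move(x):
    """Pick the best input sequence, then apply only its first move."""
    best = min(product(CANDIDATE_INPUTS, repeat=HORIZON),
               key=lambda seq: predicted_cost(x, seq))
    return best[0]

def simulate(controller, steps=40, disturbance_start=10, disturbance=-0.05):
    """Run the plant; the disturbance is unknown to the controller's model."""
    x, history = 0.0, []
    for k in range(steps):
        u = controller(x)
        d = disturbance if k >= disturbance_start else 0.0
        x = A * x + B * u + d
        history.append(x)
    return history

open_loop = simulate(lambda x: 1.0)    # fixed, pre-specified input
closed_loop = simulate(mpc_move)       # input recomputed at every step
print("open-loop final error:", abs(SETPOINT - open_loop[-1]))
print("MPC final error:", abs(SETPOINT - closed_loop[-1]))
```

Because the controller re-optimizes from the latest measurement at every step, it partly compensates for the disturbance even though its model does not include it. A practical controller would use a proper optimizer, constraints and a disturbance estimate rather than enumeration.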

In this study, productivity was found to increase 40% through the use of the model predictive controller. In addition, variation in productivity from batch to batch was reduced through the use of the automatic controller, thereby improving product consistency.

For more information on related topics, visit www.controleng.com or Perceptive Engineering at www.monitormv.com

Author Information

Dr. Barry Lennox and Dr. Ognjen Marjanovic are affiliated with the School of Engineering, University of Manchester, United Kingdom; David Sandoz, technology advisor, and David Lovett, managing director, are with Perceptive Engineering Ltd., Cheshire, United Kingdom.

Principal component analysis

When PCA is applied to a data set, it identifies artificial variables—linear combinations of the original process variables. These linear combinations, referred to as scores, represent major data variations. The number of scores that are calculated on any given data set is equal to the number of measured variables. The first score is calculated so that it will capture the major features, or patterns, in the data with the final score identifying less important information, such as process noise. Through this analysis, the information contained within the many process variables will be contained within a much smaller number of artificial variables. The formula for these artificial variables is given as:

$$T = XP$$

where $T$ is a matrix of artificial variables of size $m \times n$ ($m$ is the number of observations made of the original data and $n$ is the number of process variables), $X$ is a matrix, also of size $m \times n$, containing the original process variables, and $P$ is a matrix of coefficients referred to as loadings, of size $n \times n$.

Having identified what we believe to be the important scores, we can then reverse the above equation to recompute the original variables as:

$$\hat{X} = T_r P_r^{T}$$

where $P_r$ contains the first $r$ columns of $P$, $T_r$ contains the corresponding first $r$ columns of $T$, with $r$ being the number of scores considered to be important, and $\hat{X}$ is a matrix containing the re-computed original variables.

Under normal conditions, $X$ and $\hat{X}$ should be similar. However, under abnormal conditions, the two matrices would show significant differences. Such an increase in the difference is identified by monitoring the SPE statistic. SPE is the sum of the square error between $X$ and $\hat{X}$.
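The calculation in this sidebar can be sketched with NumPy. The example reuses the pH and acid-flow illustration from the main text with synthetic numbers. The scaling of each variable to zero mean and unit variance, the choice of one retained score, the size of the sensor offset and the function names are assumptions for this sketch. The reconstructed matrix is called `Z_hat` in the code because it is computed on the scaled data.

```python
import numpy as np

def fit_pca(X_normal, r):
    """Fit PCA on normal-operation data and keep the first r loading vectors."""
    mean = X_normal.mean(axis=0)
    std = X_normal.std(axis=0, ddof=1)
    Z = (X_normal - mean) / std                 # assumption: autoscale before PCA
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    P_r = Vt[:r].T                              # n x r loadings, ordered by variance
    return mean, std, P_r

def spe(X, model):
    """Sum of squared reconstruction errors for each observation (row)."""
    mean, std, P_r = model
    Z = (X - mean) / std
    T_r = Z @ P_r                               # retained scores
    Z_hat = T_r @ P_r.T                         # data rebuilt from the retained scores
    return np.sum((Z - Z_hat) ** 2, axis=1)

# Synthetic normal operation (assumption): pH falls as acid flow rises.
rng = np.random.default_rng(0)
acid_flow = rng.normal(10.0, 1.0, size=200)
pH = 7.0 - 0.5 * (acid_flow - 10.0) + rng.normal(0.0, 0.05, size=200)
X_normal = np.column_stack([pH, acid_flow])

model = fit_pca(X_normal, r=1)
spe_normal = spe(X_normal, model)

# Simulated sensor fault: the pH reading is offset while acid flow is unchanged.
X_fault = X_normal[:5].copy()
X_fault[:, 0] += 1.0
spe_fault = spe(X_fault, model)

print("largest normal SPE:", spe_normal.max())
print("smallest faulty SPE:", spe_fault.min())
```

The faulty samples break the learned relationship between the two variables, so their SPE values sit well above anything seen in the normal data.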