While IT networks already use virtualization extensively, its benefits are moving into industrial networks. Five diverse viewpoints on its advantages and implementation are offered.

Virtualization for manufacturing environments is a major topic of discussion, so we are approaching it from a variety of viewpoints. Below are stories written by industry professionals, each offering a perspective on virtualization.

- Getting more from less, Joel Conover, Cisco

- Virtualization lifecycle considerations, Paul Hodge, Honeywell Process Solutions

- Breaking chains to improve delivery, Grant Le Sueur, Invensys Operations Management

- Building reusable engineering, Anthony Baker, Rockwell Automation

- Preparing your power distribution system, Jim Tessier, Eaton

Key concepts

- Virtualization represents a huge advance in industrial network design and implementation.

- This technology is well proven in IT applications and is moving into manufacturing.

- A thorough understanding of the technology and appropriate planning are key to effective implementation.

Go online

- Read this story online for additional discussion of each topic.

- Get more information from the companies involved:

www.eaton.com

https://iom.invensys.com

Getting more from less

Virtualization and virtual machines can improve equipment utilization while reducing expenses.

Joel Conover

During challenging economic times, accomplishing more with fewer resources can help manufacturers with their very survival. Virtualization and virtual machines are technologies that are widely accepted in enterprise IT systems, and can help with the operational efficiencies of deploying, upgrading, and maintaining systems on the plant floor. Virtualization consolidates workloads to raise utilization levels and reduce operating, capital, space, power, and cooling expenses. When deploying virtualization on a converged network, manufacturers can gain greater ease of management while boosting application performance.

Ease of management

In a virtualized environment, control systems, servers, and services can be deployed more rapidly than physical ones without requiring hours or days of tedious and potentially error-prone manual configuration. By using role- and policy-based management models available in various unified system management software platforms, such as Cisco UCS Manager, design engineers and control system operators can implement complex changes or new deployments in minutes. This provides greater flexibility to change, upgrade, or reconfigure manufacturing designs on a system. In addition, virtualization allows for efficient remote administration with different views into security and policy implementations that can be extended to the plant floor based on the identity of the remote administrator. Thus, design engineers or control system engineers can administer, upgrade, or move one element without endangering the rest of the overall IT-integrated system.

For example, Cisco’s approach with UCS Manager offers role-based management models to maintain separation of IT and control system disciplines already established with most manufacturers. Using a policy-based model, network administrators can define all networking policies, which can be later incorporated and referenced by server administrators without involving their network administrator colleagues. Meanwhile, design engineers are freed to focus on business process as defined by the manufacturing execution system, rather than on the details of individual system configuration.

Availability and security

Virtualized systems can improve the availability of manufacturing systems, avoiding downtime through techniques such as automatic restart of failed virtual machine instances and automatic fault-tolerant failover to a different virtual machine on the same, or different, hardware platform. In a business environment where downtime equals lost revenue, these capabilities can significantly mitigate the risk of downtime from technology failure.

Security is much more flexible in virtualized systems, and can be managed by policy templates. A common deployment technique is to isolate virtual machines on virtual LANs. With this technique, different systems can have different security levels. Access can be restricted for data which might be reasonably open (analytics), somewhat restricted (control systems), or even severely restricted (robotics).
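The VLAN-based isolation described above can be pictured as a lookup from each virtual machine to a VLAN, and from each VLAN to the roles allowed to reach it. The following Python sketch is illustrative only: the VLAN IDs, role names, and machine names are hypothetical and are not taken from any Cisco product or API.

```python
from dataclasses import dataclass

# Hypothetical policy table: which roles may reach each VLAN.
# The three tiers mirror the article's examples (open, somewhat
# restricted, severely restricted); the IDs and roles are invented.
VLAN_POLICY = {
    10: {"name": "analytics", "allowed_roles": {"analyst", "engineer", "robot_admin"}},
    20: {"name": "control", "allowed_roles": {"engineer", "robot_admin"}},
    30: {"name": "robotics", "allowed_roles": {"robot_admin"}},
}


@dataclass(frozen=True)
class VirtualMachine:
    name: str
    vlan_id: int


def can_access(role: str, vm: VirtualMachine) -> bool:
    """Return True if the role is allowed on the VLAN the VM sits on."""
    policy = VLAN_POLICY.get(vm.vlan_id)
    # A VM on an unknown VLAN is denied by default.
    return policy is not None and role in policy["allowed_roles"]


historian = VirtualMachine("historian-01", vlan_id=10)
robot_cell = VirtualMachine("robot-cell-01", vlan_id=30)

print(can_access("analyst", historian))   # analytics VLAN is open to analysts
print(can_access("analyst", robot_cell))  # robotics VLAN is not
```

Keeping the policy in one table, rather than on each machine, is what lets a single template change apply to every virtual machine on a VLAN.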

Application performance

Virtualization lets you put the power and performance where it is needed. While some people may be skeptical about the efficiency of shared resources, real-world deployments have proven such skepticism unwarranted.

Virtualization also improves application performance by adapting to changing conditions. If a server application requires more memory or CPU resources, they can be allocated dynamically from idle or unused allotments. Such adaptation is impossible with fixed, physical servers running memory-intensive applications like databases or over-utilized web servers.

Whereas most enterprise systems have a 3- to 5-year renewal cycle, the cycle for manufacturing floors is much longer, typically 10-20 years. Virtualization allows for incremental replacement or upgrade of software or hardware with minimal disruption to manufacturing operations. Virtualization permits better long-term planning, reduced costs, and better return on investment.

A side benefit of server virtualization is that the same infrastructure can be used to provide hosted virtual desktops (HVD). Virtual desktops preserve security by storing all data on the hosting server—only screens are exchanged—so proprietary data remains secure in the data center. HVDs also can allow plant floor managers to bring in remotely located experts via video to quickly troubleshoot problems or provide advice.

Virtualization is a mature technology and has been used in enterprise environments and service provider data centers for many years. It has proven to save both time and money, but reaping the benefits depends on the right underlying business infrastructure. The first step is to build a converged Ethernet network, architected and tested to ensure that real-time voice, video, and data exchange services operate flawlessly together.

Companies such as Cisco, its partners, and others offer manufacturing-specific designs and product offerings that allow you to deploy converged networks and virtual systems with confidence. The benefits of a well-architected converged network extend far beyond the plant floor, enabling manufacturers to bring the right expertise and skill together for greater agility and faster decision making throughout the value chain.

Joel Conover is senior director of industry marketing, manufacturing, retail, and financial services for Cisco.

Virtualization lifecycle considerations

This new approach can help keep some older platforms operating longer.

Paul Hodge

Most industrial process users seek to maximize the length of time they can stay on a particular control system platform for two reasons: to increase ROI (return on investment) and to reduce disruption to operations. Virtualization is a key technology that can help reduce the frequency of hardware refreshes, the cost of each refresh, and the impact on process operations when a refresh occurs, all of which serve the goals of increasing ROI and minimizing operational disruption.

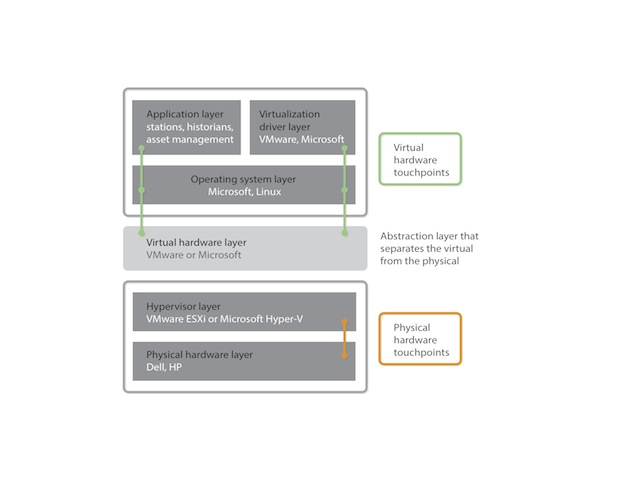

When considering how virtualization can improve operations, users should consider the lifecycle implications of several layers. These include:

- Physical hardware

- Hypervisor (VMware, Hyper-V, etc.)

- Virtual hardware

- Virtualization drivers

- Operating system, and

- Application.

Physical hardware

Virtualization provides abstraction or separation of the operating system and application layers from the physical hardware. This reduces the dependencies that operating systems and applications have on hardware, and gives users improved choices for determining which supplier or platform will best meet their performance, reliability, and support goals. However, users need to be aware that there is a new dependency between the hypervisor and the hardware. Therefore, it is important to consider the compatibility between the hypervisor and the chosen hardware and how long that hardware combination will be supported.

Hypervisor

The hypervisor is a small software layer that runs the virtual machines, and it can have a different lifecycle from the other layers. Users need to take into account its lifecycle and corresponding support from the application vendor, particularly the frequency with which the hypervisor needs to be patched or upgraded. This is because, unlike a normal operating system that impacts only one application, taking a hypervisor down for maintenance may impact multiple operating systems. The ability to complete these updates in a way that is nondisruptive to operations is also important, and the ideal solution provides continued application execution during updates.

All major hypervisor manufacturers allow for continued application execution during upgrading and patching; however, users should check to ensure that these extended features are supported by the application vendors. Without this capability, maintenance windows will need to be found to patch or upgrade the hypervisor when necessary.

Virtual hardware

Virtual hardware is a software-emulated hardware layer that sits between the hypervisor and the operating systems used to run the applications. It emulates a motherboard with particular graphics, USB, sound, and other capabilities. Depending on the hypervisor supplier, this layer can have a separate release and support cycle. This is important as virtual hardware touches the operating system and applications inside the virtual machine. Extended stability in this layer can bring about simplified qualification of operating system/application solutions and reduced retesting.

Virtual drivers

Think of virtual drivers as regular drivers for virtual hardware instead of physical hardware. Because these drivers touch the operating system and application layers, the frequency of change and stability in the drivers is an important consideration.

Operating system

Virtualization can extend the life of certain operating systems, and applications that depend on them, by allowing them to run on hardware that otherwise would not provide support. New hardware that supports Windows XP, for example, is very difficult to source. Yet it can be run virtually on new physical hardware as long as the hypervisor vendor supports Windows XP. An additional benefit of virtualization comes from not having to re-install the operating system when upgrading the physical hardware. But keep in mind that, in general, an operating system vendor's support policy is the same for physical and virtual installations. So, running an operating system virtually does not extend the period for which it is supported.

Application

Similar to the operating system layer, running virtualized applications can provide additional flexibility by allowing them to run on hardware normally unsupported by the application vendor. However, there are additional considerations:

- In most cases virtualized applications will not change the duration that a given application is supported.

- The application vendor needs to have flexibility in support policy to allow the hypervisor to run on multiple hardware platforms.

- While a vendor may support multiple hardware platforms, it may still have standardized offerings to aid in support and project deployment, among other areas.

To obtain the new types of flexibility that virtualization provides, additional layers are required and the lifecycle implications of these layers need to be understood. With the correct support from their application vendors, users can achieve better ROI from their process control system lifecycle while reducing the amount of disruption to operations.
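One way to keep the lifecycle of each layer in view is to record an end-of-support date per layer and look for the one that expires first. The sketch below is a minimal illustration under stated assumptions: every date is invented for the example (real dates come from the respective vendors), the function name is hypothetical, and treating the earliest date as the limiting factor is a simplification rather than a rule from any vendor.

```python
from datetime import date

# Hypothetical end-of-support dates for the six layers listed earlier.
layers = {
    "physical hardware": date(2030, 6, 30),
    "hypervisor": date(2028, 12, 31),
    "virtual hardware": date(2029, 3, 31),
    "virtualization drivers": date(2029, 3, 31),
    "operating system": date(2027, 10, 31),
    "application": date(2031, 1, 31),
}


def limiting_layer(end_of_support: dict[str, date]) -> tuple[str, date]:
    """Return the layer whose support ends first, with its date."""
    name = min(end_of_support, key=end_of_support.get)
    return name, end_of_support[name]


name, when = limiting_layer(layers)
print(f"First layer to lose support: {name} ({when.isoformat()})")
```

With the invented dates above, the operating system is the first layer to fall out of support, which matches the point that virtualizing an operating system does not lengthen its support period.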

Paul Hodge is virtualization product manager for Honeywell Process Solutions.

Breaking chains to improve delivery

Virtualization can bridge distance and make resources available anywhere.

Grant Le Sueur

While virtual computing has been an indispensable utility within the IT industry for several years, manufacturing and process automation industries have been slower to accept and recognize its benefits. However, as the technology evolves, we’re beginning to see the obvious impact it can have on a plant environment. In fact, virtualization will soon change how automation providers develop, deliver, and help commission their systems and solutions.

Breaking the fixed sequence

In general, a fixed sequence of events rules the lifecycle of a project. First, there is a delivery phase, where the system is designed to match production requirements, equipment is procured, the system is staged and tested, the system is validated and shipped, and then the plant is commissioned. After that, the operational phase kicks in, where the new system is supported, hardware is replaced when it reaches its end of life, and the system is upgraded, particularly with new software capabilities.

By enhancing both of these phases, virtualization improves the traditional delivery model. While engineering and testing used to be sequential activities that could occur only where the actual equipment was located, virtualization allows providers to decouple activity and location. It enables delivery activity to occur anywhere in the world, effectively breaking the tether between engineers and the physical hardware. Individuals with specialized talent can now apply their skills to system engineering no matter where they are located, even if they are thousands of miles away from the hardware!

Benefits to delivery

In the delivery phase, virtualization gets a system up and running significantly faster than by procuring and installing hardware and software in the traditional manner. Not only are fewer machines required, but in some cases engineering can commence earlier using a common virtual machine. Hardware that would normally be deployed on-site only after the system has been designed can be procured and delivered to the facility for early installation, independent of engineering configuration. Because virtual machines can be instantiated simply by copying files, engineers can create an entire system in the virtual environment, and they can do it within hours, not days or weeks. No longer does engineering have to occur where the system is going to be staged—it can be done anywhere in the world, and the system can be staged and tested in a place that is nearer or more convenient for the end user.

Moreover, if multiple process designers or contractors are involved, testing and validating can be done concurrently at several locations instead of only where the equipment is. That means the system hardware can be shipped to the site for early installation even while the system is still being tested. After testing, it can be dispatched electronically. Last-minute updates and modifications can be handled in the same way. All of these capabilities mean the plant can be commissioned earlier—the entire delivery schedule has been compressed.

Bring operational problems to the expert

Whether we like it or not, sometimes hardware fails, and components always wear out. After the plant has been commissioned, the new system often exhibits faults that may not be easy to resolve at the facility. Virtualization allows these problems to be brought to the expert. System faults can be located and resolved more easily with an offline shadow system that mirrors the online production system. Additionally, virtual machines can be used to provide updates automatically using fault-tolerant configurations, or they can be copied manually to a replacement server host. The technology also allows system updates to take place on an offline virtual system, and those images can then be installed onto a production host server within minutes, instead of hours, days, or weeks.

A no-brainer

Given all these benefits, applying virtualization within manufacturing and process automation industries might be the biggest no-brainer of the decade. In fact, some experts suggest that by 2020, very few new systems will be delivered outside of a virtualized environment, while many incumbent systems will be modified to encompass virtualization technologies. It’s already changing the face of how automation providers develop and deliver their solutions and how the end user puts them to use. Are you ready?

Grant Le Sueur is director of product management for Invensys Operations Management.

Building reusable engineering

Using templates with virtual machines can save reinventing your software wheel.

Anthony Baker

VMware vCenter templates provide opportunities for corporate engineering, system integrators, and other engineering teams to standardize work and simplify deployment of common system elements across multiple sites and customers. Templates provide a common starting point for the installation and configuration of automation software. Deploying a control system can take days of effort per workstation and/or server: it takes time to plan out system architecture, check for compatibility, install and patch the OS (operating system), and configure application content. Each of these steps also introduces risk if not done correctly.

With virtualization, the time and risk can be reduced drastically. Through the use of virtual machine (VM) templates, deployment can take minutes instead of days. Templates for workstations and servers are provided by some automation vendors with predefined software and OS builds or can be created by users for their specific systems.

Duplicate existing configurations

VMware vCenter Server allows system administrators to create templates from an existing VM. Your engineers can build a standardized VM for different defined workstations, such as operator or engineering workstations, that contain a defined build of OS and software content. This standardized VM is turned into a template and becomes a “golden image” for deployment for the rest of the system stations during implementation. It can also be reused in the future should the user decide to expand.

When expanding in the future, the user no longer has to worry about locating the exact installation media and hardware or worry about compatibility with the rest of the system. If the user requires an additional operator workstation, he or she can simply deploy a new VM from the template at any time.

Standardizing an image in such a manner can be extremely beneficial in validated industries. It reduces the amount of effort that needs to go into testing each station—not just on initial installation, but over the lifetime of the system when stations need to be maintained and replaced. Should the hardware (e.g., a thin client) fail at a station, it can be replaced without change to the image, reducing the need to revalidate the OS and software portion of that station.

Choosing the right applications

To determine when it makes sense to create a template, a user should keep a few things in mind. Not all software or services are appropriate for this type of deployment. Microsoft SQL Server and Windows components, such as the Active Directory, should not be installed on a template. Software packages that cannot tolerate changes, such as computer name and/or IP addresses, may cause conflicts if the user would like to replicate multiple instances in the same system.

VMware vCenter Server utilizes Sysprep for creating templates based on Windows OSs. Each version of Windows requires its own version of Sysprep that can be found on the install media provided or downloaded from the Microsoft website.

Each time an instance is deployed from a template with Sysprep loaded in vCenter Server, the user is able to customize the new VM. Upon initial boot, the user will be prompted to enter the information for computer name, network configuration (workgroup/domain), administrator credentials, and Windows licensing as required. Once this setup process is completed, the VM will reboot and bring the user to the desktop of the new instance, ready for use.
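The golden-image workflow can be modeled in a few lines: a template fixes the OS build and software content, and each deployment supplies only the per-instance identity. The Python sketch below is a conceptual model, not the VMware vCenter or Sysprep interface; the class, function, and field names are hypothetical, as are the build strings and addresses.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VmImage:
    os_build: str
    software: tuple[str, ...]
    computer_name: str = ""   # left blank in the template
    ip_address: str = ""      # left blank in the template


def deploy_from_template(template: VmImage, computer_name: str,
                         ip_address: str, existing: list[VmImage]) -> VmImage:
    """Copy the template and apply a unique identity to the new VM."""
    for vm in existing:
        # Duplicate names or addresses are the conflicts the article warns about.
        if vm.computer_name == computer_name:
            raise ValueError(f"computer name already in use: {computer_name}")
        if vm.ip_address == ip_address:
            raise ValueError(f"IP address already in use: {ip_address}")
    return replace(template, computer_name=computer_name, ip_address=ip_address)


# Hypothetical golden image for an operator workstation.
operator_template = VmImage(os_build="example-os-build-1",
                            software=("hmi-client",))

fleet: list[VmImage] = []
fleet.append(deploy_from_template(operator_template, "OPER-01", "10.0.0.11", fleet))
fleet.append(deploy_from_template(operator_template, "OPER-02", "10.0.0.12", fleet))
```

Every deployed station shares the template's OS build and software list, so only the identity fields differ between stations.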

As concepts such as reusable engineering are increasingly promoted to improve engineering efficiency, VM templates will prove to be an interesting opportunity for system deployment.

Anthony Baker is PlantPAx characterization and lab manager for Rockwell Automation.

Preparing your power distribution system

Virtualization places different demands on your infrastructure than traditional architecture.

Jim Tessier

By increasing the utilization level of servers, virtualization has the potential to deliver incredible savings in terms of server count, footprint, power consumption, and cooling requirements. However, in order to fully reap these benefits without sacrificing electrical reliability, a few important power distribution challenges must be addressed.

For one, overall power consumption will be lower, but it will be of higher variability and concentration. For example, on an un-virtualized platform, the average server CPU runs at only 10%-15% of capacity. With virtualization, that figure increases to about 70%-80%. As CPU utilization increases, so does power consumption per server.
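A rough calculation shows how total power can fall while power per server rises. The sketch below rests on assumptions that are not in the article: identical servers, no virtualization overhead, a linear power model between idle and peak draw, and hypothetical figures of 200 W idle and 400 W peak. The utilization values are picked from within the ranges quoted above.

```python
import math


def hosts_needed(server_count: int, avg_util: float, target_util: float) -> int:
    """Hosts required to carry the same total work at the target utilization."""
    return math.ceil(server_count * avg_util / target_util)


def server_power_w(util: float, idle_w: float = 200.0, peak_w: float = 400.0) -> float:
    """Assumed linear power model: idle draw plus a share of the idle-to-peak span."""
    return idle_w + (peak_w - idle_w) * util


servers = 20          # hypothetical un-virtualized server count
before_util = 0.12    # within the 10%-15% range cited
target_util = 0.75    # within the 70%-80% range cited

hosts = hosts_needed(servers, before_util, target_util)
after_util = servers * before_util / hosts   # actual utilization once rounded up

before_total_w = servers * server_power_w(before_util)
after_total_w = hosts * server_power_w(after_util)

print(f"hosts: {hosts}, utilization per host: {after_util:.0%}")
print(f"per server: {server_power_w(before_util):.0f} W -> {server_power_w(after_util):.0f} W")
print(f"total: {before_total_w:.0f} W -> {after_total_w:.0f} W")
```

Under these assumptions, 20 lightly loaded servers collapse onto four hosts: each host draws more than any one of the original servers did, but the total draw falls sharply and is concentrated in far fewer enclosures.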

System availability becomes all the more important as servers are pressed to carry these larger workloads. To protect servers, increase the density of enclosure-level power protection and distribution. Enclosure-based power modules are available that can distribute up to 36 kW in only a few U (rack units) of rack space. These cover four to 45 receptacles in an organized manner to meet the needs of a wide range of power densities.

Additionally, modern enclosure-based PDUs (power distribution units) are available that let you view the status of each circuit securely from anywhere on the company intranet or the Internet, and that provide automated alerts of potential trouble.

Increasing power demands

Further up the power system, you’ll want to ensure existing circuits are capable of supporting high-density computing systems. Traditionally, facility managers could plan for about 60 to 100 W of power consumption per U of rack space, so a full rack of equipment averaged 3 to 4 kW. Today’s blade servers have escalated that figure to 600 to 1,000 W per U, which is steadily growing and may soon reach up to 40 kW per rack.

Five or 10 years ago, a typical computer room was designed to feed one 20 A, 208 V circuit to each rack, or less than 3.5 kW per rack. If you now have to support 20 kW of equipment in each rack, it could take up to six of these 20 A circuits. The existing electrical infrastructure will be unable to support this load growth, and could easily run out of circuits or run out of capacity, especially with the growing prevalence of dual- and triple-corded loads.
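The rack and circuit figures in this section can be checked with simple arithmetic. The sketch below is illustrative: the 42U rack height and the 80% continuous-load derating factor are assumptions not stated in the article, and the function names are hypothetical.

```python
import math


def rack_load_kw(watts_per_u: float, rack_units: int = 42) -> float:
    """Load of a fully populated rack, assuming a 42U enclosure."""
    return watts_per_u * rack_units / 1000


def circuit_capacity_kw(volts: float, amps: float, derating: float = 0.8) -> float:
    """Usable capacity of one circuit; derating of 0.8 is an assumption."""
    return volts * amps * derating / 1000


def circuits_needed(load_kw: float, volts: float, amps: float,
                    derating: float = 0.8) -> int:
    """Whole circuits required to carry the load."""
    return math.ceil(load_kw / circuit_capacity_kw(volts, amps, derating))


print(rack_load_kw(100))                       # traditional density, upper end
print(rack_load_kw(1000))                      # blade density, upper end
print(circuit_capacity_kw(208, 20))            # one 20 A, 208 V circuit, derated
print(circuits_needed(20, 208, 20))            # 20 kW rack, derated circuits
print(circuits_needed(20, 208, 20, 1.0))       # 20 kW rack, no derating
```

Under these assumptions a 20 A, 208 V circuit delivers roughly 3.3 kW, and a 20 kW rack needs between five circuits (no derating) and seven (80% derating), which brackets the figure of about six given above.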

You can solve this problem by building out a power subdistribution strategy. Instead of running individual cable drops from your large UPS and PDUs to each rack, run higher powered subfeed circuits to an intermediate remote power panel (RPP), power distribution rack (PDR), or rack-mounted power distribution device, and from there to enclosures.

Visibility into demand

Although the power system adjustments suggested will help ensure servers receive adequate power and circuit overload is prevented, it remains highly important to increase your level of visibility into how the movement of applications affects power and vice versa. After all, application demands affect power consumption, and power consumption affects application availability and performance—yet rarely do these two interdependent groups work in unison.

This issue can be addressed with 24×7 power quality metering, monitoring, and management at the branch circuit level, which can be conducted at several points in the data center power distribution system. With this type of universal perspective, you can easily see when irregular conditions threaten processes, and react accordingly to prevent system damage or downtime.
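At its simplest, branch-circuit monitoring compares each circuit's measured current with a threshold and flags the ones that exceed it. The following sketch is a minimal illustration, not any Eaton product interface: the circuit names, the readings, the 20 A breaker rating, and the 80% alert threshold are all hypothetical.

```python
def overloaded_circuits(readings_amps: dict[str, float],
                        breaker_amps: float = 20.0,
                        threshold: float = 0.8) -> list[str]:
    """Return the names of circuits drawing more than the alert threshold."""
    limit = breaker_amps * threshold
    return sorted(name for name, amps in readings_amps.items() if amps > limit)


# Hypothetical snapshot of branch-circuit current readings, in amps.
readings = {
    "rack1-A": 9.5,
    "rack1-B": 17.2,
    "rack2-A": 12.0,
}

for circuit in overloaded_circuits(readings):
    print(f"ALERT: {circuit} is above the alert threshold")
```

Running the same check after each virtual machine migration is one way to see how application movement shifts load between circuits.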

Virtualization enables a facility to meet business objectives with fewer physical servers, which results in dramatic energy and physical footprint savings. However, successful implementation of any virtualization project requires that you prepare for dynamic changes in power demands, higher power densities, and the critical need to have oversight of application movement. Fortunately, proven solutions are available that address these power considerations and allow facilities to extract the full potential of virtualization while ensuring that power demands and business needs are always met.

Jim Tessier is a virtualization product manager for Eaton.
