Familiar examples show how and why proportional-integral-derivative controllers behave the way they do.

KEYWORDS: Process control; Control theory; Controllers; Loop controllers; PID; Tutorial diagrams

A feedback controller is designed to generate an output that causes some corrective effort to be applied to a process so as to drive a measurable process variable towards a desired value known as the setpoint. The controller uses an actuator to affect the process and a sensor to measure the results.

Virtually all feedback controllers determine their output by observing the error between the setpoint and a measurement of the process variable. Errors occur when an operator changes the setpoint intentionally or when a disturbance or a load on the process changes the process variable accidentally. The controller’s mission is to eliminate the error automatically.

An example

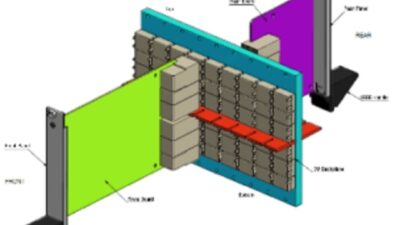

Consider, for example, the mechanical flow controller depicted above. A portion of the water flowing through the tube is bled off through the nozzle on the left, driving the spherical float upwards in proportion to the flow rate. If the flowrate slows because of a disturbance such as leakage, the float falls and the valve opens until the desired flow rate is restored.

In this example, the water flowing through the tube is the process, and its flowrate is the process variable that is to be measured and controlled. The lever arm serves as the controller, taking the process variable measured by the float’s position and generating an output that moves the valve’s piston. Adjusting the length of the piston rod sets the desired flowrate; a longer rod corresponds to a lower setpoint and vice versa.

A mechanical flow controller manipulates the valve to maintain the downstream flow rate in spite of the leakage. The size of the valve opening at time t is V(t). The flowrate is measured by the vertical position of the float F(t). The gain of the controller is A/B.

This arrangement would be entirely impractical for a modern flow control application, but a similar principle was actually used in James Watt's original fly-ball governor. Watt used a pair of spinning fly-balls to measure the speed of his steam engine (through a mechanical linkage) and a lever arm to adjust the steam flow to keep the speed constant.

Suppose that at time t the valve opening is V(t) inches and the resulting flowrate is sufficient to push the float to a height of F(t) inches. This process is said to have a gain of $G_p = F(t)/V(t)$. The gain of a process shows how much the process variable changes when the controller output changes. In this case,

$$F(t) = G_p\,V(t) \qquad [1]$$

Equation [1] is an example of a process model that quantifies the relationships between the controller’s efforts and its effects on the process variable.

The controller also has a gain $G_c$, which determines the controller's output at time t according to

$$V(t) = G_c\,(F_{max} - F(t)) \qquad [2]$$

The constant $F_{max}$ is the highest possible float position, achieved when the valve's piston is completely depressed. The geometry of the lever arm shows that $G_c = A/B$, since the valve's piston will move A inches for every B inches that the float moves. In other words, the quantity $(F_{max} - F(t))$ that enters the controller as an input ‘gains’ strength by a factor of A/B before it is output to the process as a control effort V(t).

Note that controller equation [2] can also be expressed as

$$V(t) = G_c\,(F_{set} - F(t)) + V_B \qquad [3]$$

where $F_{set}$ is the desired float position (achieved when the flow rate equals the setpoint) and $V_B = G_c\,(F_{max} - F_{set})$ is a constant known as the bias. A controller's bias represents the control effort required to maintain the process variable at its setpoint in the absence of a load.
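Substituting the definition of the bias into equation [3] recovers equation [2], confirming that the two forms describe the same lever:

$$
\begin{aligned}
V(t) &= G_c\,(F_{set} - F(t)) + G_c\,(F_{max} - F_{set}) \\
     &= G_c\,(F_{set} - F(t) + F_{max} - F_{set}) \\
     &= G_c\,(F_{max} - F(t)).
\end{aligned}
$$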

Proportional control

Equation [3] shows how this simple mechanical controller computes its output as a result of the error between the process variable and the setpoint. It is a proportional controller because its output changes in proportion to a change in the measured error. The greater the error, the greater the control effort; and as long as the error remains, the controller will continue to try to generate a corrective effort.

So why would a feedback controller have to be any more sophisticated than that? The problem is that a proportional controller tends to settle on the wrong corrective effort. As a result, it will generally leave a steady-state error (offset) between the setpoint and the process variable after it has finished responding to a setpoint change or a load.

This phenomenon puzzled early control engineers, but it can be seen in the flow control example above. Suppose the process gain $G_p$ is 1, so that any valve position V(t) will cause an identical float position F(t). Suppose also that the controller gain $G_c$ is 1 and the controller's bias $V_B$ is 1. If the flow rate's setpoint requires $F_{set}$ to be 3 inches and the actual float position is only 2 inches, there will be an error of $(F_{set} - F(t)) = 1$ inch. According to equation [3], the controller will turn that 1-inch error into a 2-inch valve opening ($1 \times 1 + 1 = 2$). However, since that 2-inch valve opening will in turn hold the float at 2 inches, the controller will make no further change to its output and the error will remain at 1 inch.
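A minimal Python sketch reproduces the offset. It adds one assumption that is not in the article: rather than jumping instantly to $G_p V(t)$, the float moves a fixed fraction `lag` of the way there on each time step (a crude first-order lag), which lets the loop settle instead of chattering. The function name and its parameters are illustrative.

```python
def settle_proportional(g_p, g_c, v_b, f_set, f0=0.0, lag=0.1, steps=500):
    """Simulate the float-and-lever loop under proportional control.

    Returns (settled float position, remaining error), both in inches.
    Assumption (not from the article): on each step the float moves a
    fraction `lag` of the way toward G_p * V(t) instead of jumping there.
    """
    f = f0
    for _ in range(steps):
        v = g_c * (f_set - f) + v_b   # controller output, equation [3]
        f += lag * (g_p * v - f)      # float drifts toward equation [1]
    return f, f_set - f


if __name__ == "__main__":
    # The article's numbers: G_p = 1, G_c = 1, V_B = 1, F_set = 3 inches.
    position, offset = settle_proportional(g_p=1.0, g_c=1.0, v_b=1.0, f_set=3.0)
    print(f"float settles at {position:.3f} in, offset {offset:.3f} in")
```

Calling it with `g_c=2.0, v_b=0.0` (the bias-free variation suggested under Integral control) also leaves the float at 2 inches. The step fraction `lag` has to be small enough for the chosen gains; too large a value makes this crude update oscillate or diverge on its own.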

The same mechanical controller now manipulates the valve to shut off the flow once the tank has filled to the desired level $F_{set}$. The controller's gain of A/B has been set much lower, since the float position now spans a much greater range.

Integral control

Even bias-free proportional controllers can cause steady-state errors (try the previous exercise again with $G_p = 1$, $G_c = 2$, and $V_B = 0$). One of the first solutions to overcome this problem was the introduction of integral control. An integral controller generates a corrective effort proportional not to the present error, but to the sum of all previous errors.
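Solving equations [1] and [3] together shows why the offset survives. At steady state the float has stopped moving, so F(t) takes a constant value, written $F_{ss}$ here:

$$
F_{ss} = G_p\left[\,G_c\,(F_{set} - F_{ss}) + V_B\,\right]
\quad\Longrightarrow\quad
F_{ss} = \frac{G_p G_c\,F_{set} + G_p V_B}{1 + G_p G_c},
\qquad
F_{set} - F_{ss} = \frac{F_{set} - G_p V_B}{1 + G_p G_c}
$$

With $G_p = G_c = V_B = 1$ and $F_{set} = 3$ inches the offset is $(3 - 1)/2 = 1$ inch; with $G_c = 2$ and $V_B = 0$ it is $3/3 = 1$ inch. The offset vanishes only if the bias happens to satisfy $G_p V_B = F_{set}$, and it shrinks, without disappearing, as the loop gain $G_p G_c$ grows.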

The level controller depicted above illustrates this point. It is essentially the same float-and-lever mechanism from the flow control example except that it is now surrounded by a tank, and the float no longer hovers over a nozzle but rests on the surface of the water. This arrangement should look familiar to anyone who has inspected the workings of a common household toilet.

As in the first example, the controller uses the valve to control the flowrate of the water. However, its new objective is to refill the tank to a specified level whenever a load (i.e., a flush) empties the tank. The float position F(t) still serves as the process variable, but it represents the level of the water in the tank, rather than the water's flowrate. The setpoint $F_{set}$ is the level at which the tank is full.

The process model is no longer a simple gain equation like [1], since the water level is proportional to the accumulated volume of water that has passed through the valve. That is,

$$F(t) = G_p \int_0^t V(\tau)\,d\tau \qquad [4]$$

Equation [4] shows that tank level F(t) depends not only on the size of the valve opening V(t) but also on how long the valve has been open.

The controller itself is the same, but the addition of the integral action in the process makes the controller more effective. Specifically, a controller that contains its own integral action or acts on a process with inherent integral action will generally not permit a steady-state error.

That phenomenon becomes apparent in this example. The water level in the tank will continue to rise until the tank is full and the valve shuts off. On the other hand, if both the controller and the process happened to be pure integrators as in equation [4], the tank would overflow. Back-to-back integrators in a closed loop provide no damping: the accumulated error would hold the valve open after the level reached the setpoint, and the level would overshoot rather than settle.
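The contrast between a proportional controller and an integral-only controller on this integrating process can be sketched numerically. Assumptions not in the article: unit gains, a setpoint of 3, small explicit time steps of 0.001, and a fill valve that cannot run backwards (its opening is clamped at zero). The function names are illustrative.

```python
def fill_tank(controller, g_p=1.0, f_set=3.0, dt=0.001, t_end=20.0):
    """Refill an emptied tank and return (final level, peak level).

    Process: dF/dt = G_p * V(t), the integrating model of equation [4],
    advanced in small time steps. The accumulated error is updated before
    the valve opening is computed on each step.
    Assumption: the fill valve cannot run backwards, so V(t) >= 0.
    """
    level = 0.0          # tank starts empty, just after a flush
    peak = 0.0
    accumulated = 0.0    # running integral of the error
    for _ in range(int(t_end / dt)):
        error = f_set - level
        accumulated += error * dt
        opening = max(0.0, controller(error, accumulated))
        level += g_p * opening * dt
        peak = max(peak, level)
    return level, peak


def proportional(error, accumulated):
    return 1.0 * error        # the float-and-lever controller, gain 1


def integral_only(error, accumulated):
    return 1.0 * accumulated  # hypothetical pure-integrator controller


if __name__ == "__main__":
    for name, ctrl in [("proportional", proportional), ("integral-only", integral_only)]:
        final, peak = fill_tank(ctrl)
        print(f"{name:>13}: final level {final:.2f}, peak {peak:.2f} (setpoint 3.00)")
```

Under these assumptions the proportional lever fills the tank to the setpoint without overshoot, while the integral-only controller keeps the valve open until the level reaches roughly twice the setpoint; because the clamped valve cannot drain water, the level stays there.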

The blue trace on this strip chart shows the error between the process variable F(t) and its desired value F set . The derivative control action in red is the time derivative of this difference. Derivative control action is zero when the error is constant and spikes dramatically when the error changes abruptly.

Derivative control

Proportional (P) and integral (I) controllers still weren’t good enough for early control engineers. Combining the two operations into a single ‘PI’ controller helped, but in many cases a PI controller still takes too long to compensate for a load or a setpoint change. Improved performance was the impetus behind the development of the derivative controller (D) that generates a control action proportional to the time derivative of the error signal.

The basic idea of derivative control is to generate one large corrective effort immediately after a load change in order to begin eliminating the error as quickly as possible. The strip chart in the derivative control example shows how a derivative controller achieves this. At time $t_1$, the error, shown in blue, has increased abruptly because a load on the process has dramatically changed the process variable (such as when the toilet is flushed in the level control example).

The derivative of the error signal is shown in red. Note the spike at time $t_1$. This happens because the derivative of an abrupt, step-like change is a tall, narrow, impulse-like spike. However, since the error signal is much more level after time $t_1$, the derivative of the error returns to roughly zero thereafter.
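A backward difference on sampled data shows the same effect numerically. The error signal here is made up for illustration: it is zero until a load hits at $t_1$ = 1 s, jumps to 1, and then decays slowly. The sample period and decay rate are assumptions.

```python
import math


def backward_difference(samples, dt):
    """Estimate de/dt from evenly spaced samples.

    The first sample has no predecessor, so its derivative is set to 0.
    """
    return [0.0] + [(curr - prev) / dt for prev, curr in zip(samples, samples[1:])]


dt = 0.01   # sample period in seconds (illustrative)
k1 = 100    # sample index of the load change, i.e. t1 = 1.0 s
# Hypothetical error signal: zero until the load hits at t1, then an abrupt
# jump to 1 that decays slowly as the other control actions take effect.
error = [0.0 if k < k1 else math.exp(-(k - k1) * dt / 5.0) for k in range(300)]
d_error = backward_difference(error, dt)

spike_index = max(range(len(d_error)), key=lambda k: d_error[k])
print(f"spike of {d_error[spike_index]:.1f} per second at t = {spike_index * dt:.2f} s")
largest_elsewhere = max(abs(d) for k, d in enumerate(d_error) if k != k1)
print(f"largest |de/dt| anywhere else: {largest_elsewhere:.3f}")
```

The spike's height is the size of the jump divided by the sample period, so a faster sample rate makes the derivative kick taller and narrower.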

In many cases, adding this ‘kick’ to the controller’s output solves the performance problem nicely. The derivative action doesn’t produce a particularly precise corrective effort, but it generally gets the process moving in the right direction much faster than a PI controller would.

Combined PID control

Fortunately, the proportional and integral actions of a full ‘PID’ controller tend to make up for the derivative action’s lack of finesse. After the initial kick has passed, derivative action generally dies out while the integral and proportional actions take over to eliminate the remaining error with more precise corrective efforts. As it happens, derivative-only controllers are very difficult to implement anyway.
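The way the three actions add up can be sketched as a discrete-time controller. This is a minimal illustration under stated assumptions (a fixed sample period `dt`, the error computed as setpoint minus measurement, a backward-difference derivative); the class and method names are hypothetical, and practical PID implementations add output limits, anti-windup, and derivative filtering.

```python
class PID:
    """Minimal discrete-time PID controller (positional form).

    output = kp * e + ki * sum(e * dt) + kd * (e - e_prev) / dt
    """

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.accumulated = 0.0   # running integral of the error
        self.prev_error = None   # no history before the first sample

    def update(self, setpoint, measurement):
        """Return (output, (p_term, i_term, d_term)) for one sample."""
        error = setpoint - measurement
        self.accumulated += error * self.dt
        if self.prev_error is None:
            derivative = 0.0     # first sample: no slope to estimate yet
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        p = self.kp * error
        i = self.ki * self.accumulated
        d = self.kd * derivative
        return p + i + d, (p, i, d)


if __name__ == "__main__":
    pid = PID(kp=1.0, ki=0.5, kd=0.2, dt=0.1)
    # Hold the measurement at the setpoint, then apply a sudden load that
    # drops it by 1 unit and leaves it there (no process model here).
    for step in range(6):
        measurement = 0.0 if step < 2 else -1.0
        out, (p, i, d) = pid.update(setpoint=0.0, measurement=measurement)
        print(f"step {step}: P={p:.2f} I={i:.2f} D={d:.2f} output={out:.2f}")
```

In this run the derivative term fires only on the sample where the error jumps, the proportional term holds steady while the error persists, and the integral term keeps growing, which mirrors the division of labor described above.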

On the other hand, the addition of integral and derivative action to a proportional-only controller has several potential drawbacks. The most serious of these is the possibility of closed-loop instability (see ‘Controllers must balance performance with closed-loop stability,’ Control Engineering, May 2000). If the integral action is too aggressive, the controller may over-correct for an error and create a new one of even greater magnitude in the opposite direction. When that happens, the controller will eventually start driving its output back and forth between fully on and fully off, often described as hunting. Proportional-only controllers are much less likely to cause hunting, even with relatively high gains.

Another problem with the PID controller is its complexity. Although the basic operations of its three actions are simple enough when taken individually, predicting exactly how well they will work together for a particular application can be difficult. The stability issue is a prime example. Whereas adding integral action to a proportional-only controller can cause closed-loop instability, adding proportional action to an integral-only controller can prevent it.
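The second half of that claim can be checked on the idealized tank of equation [4]. Write a proportional-plus-integral controller as $V(t) = K_p\,e(t) + K_i \int_0^t e(\tau)\,d\tau$ with $e(t) = F_{set} - F(t)$; $K_p$ and $K_i$ are labels introduced here for the two gains, not symbols from the article. Differentiating equation [4] twice gives $\ddot F = G_p \dot V$, and substituting the controller yields

$$
\ddot F(t) + G_p K_p\,\dot F(t) + G_p K_i\,F(t) = G_p K_i\,F_{set}
$$

With $K_p = 0$ the middle (damping) term disappears and, in this idealized model, the level oscillates about $F_{set}$ indefinitely. Any positive $K_p$ restores damping, so with positive gains the oscillation dies out and the level settles at $F_{set}$. The model ignores valve limits and process lags, so it says nothing about the first half of the claim.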

PID in action

Revisiting the flow control example, suppose an electronic PID controller capable of generating integral and derivative action as well as proportional control has replaced the simple lever-arm controller. Suppose too that a viscous slurry has replaced the water, so the flow rate changes gradually when the valve is opened or closed.

Because this viscous process responds slowly to the controller's efforts, the controller's immediate reaction to a sudden difference between the process variable and the setpoint (caused by a load or a setpoint change) will be determined primarily by the derivative action, as shown in the derivative control example. This causes the controller to initiate a burst of corrective effort the instant the error moves away from zero. The change in the process variable will also initiate the proportional action that keeps the controller's output going until the error is eliminated.

After a while, the integral action will begin to contribute to the controller’s output as the error accumulates over time. In fact, the integral action will eventually dominate the controller’s output, since the error decreases so slowly in a sluggish process. Even after the error has been eliminated, the controller will continue to generate an output based on the accumulation of errors remaining in the controller’s integrator. The process variable may then overshoot the setpoint, causing an error in the opposite direction, or perhaps closed-loop instability.

If the integral action is not too aggressive, this subsequent error will be smaller than the original, and the integral action will begin to diminish as negative errors are added to the history of positive ones. This whole operation may then repeat several times until both the error and the accumulated error are eliminated. Meanwhile, the derivative term will continue to add its share to the controller output based on the derivative of the oscillating error signal. The proportional action also will come and go as the error waxes and wanes.

Now replace the viscous slurry with water, causing the process to respond quickly to the controller’s output changes. The integral action will not play as dominant a role in the controller’s output, since the errors will be short lived. On the other hand, the derivative action will tend to be larger because the error changes rapidly when the process is highly responsive.
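The difference between the slurry and the water can be sketched by driving a first-order process, $\tau\,dy/dt = u - y$, with the same PID gains and two time constants. The first-order model, the time constants (10 for the slurry, 0.5 for the water), and the gains are all illustrative assumptions, not values from the article.

```python
def run_pid_on_lag(tau, kp=1.0, ki=1.0, kd=0.1, dt=0.01, t_end=60.0):
    """Step the setpoint from 0 to 1; return (peak of y, peak integral term).

    Process (assumed): tau * dy/dt = u - y, a unit-gain first-order lag
    advanced with Euler steps. A smaller tau means a faster process.
    Controller: positional PID on the error, with a backward-difference
    derivative, so the setpoint step produces a one-sample derivative kick.
    """
    y = 0.0
    accumulated = 0.0
    prev_error = 0.0   # the loop sat at the old setpoint (0) before t = 0
    peak_y = peak_i = 0.0
    for _ in range(int(t_end / dt)):
        error = 1.0 - y
        accumulated += error * dt
        p = kp * error
        i = ki * accumulated
        d = kd * (error - prev_error) / dt
        prev_error = error
        u = p + i + d
        y += dt * (u - y) / tau
        peak_y = max(peak_y, y)
        peak_i = max(peak_i, i)
    return peak_y, peak_i


if __name__ == "__main__":
    for name, tau in [("viscous slurry", 10.0), ("water", 0.5)]:
        peak_y, peak_i = run_pid_on_lag(tau)
        print(f"{name:>14} (tau={tau:4}): peak y = {peak_y:.2f}, "
              f"peak integral term = {peak_i:.2f}")
```

With these particular gains the sluggish process overshoots the setpoint, and its integral term climbs well above the steady-state output of 1 because error keeps accumulating while the process catches up. The responsive process approaches the setpoint from below, and its integral term never exceeds 1. Different gains give different behavior, so the comparison holds only for the values chosen here.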

Clearly the possible effects of a PID controller are as varied as the processes to which it is applied. A PID controller can fulfill its mission to eliminate errors, but only if it is properly configured for each application.

For more information on control loop analysis and tuning, visit www.controleng.com.

Consulting Editor, Vance J. VanDoren, Ph.D., P.E., is president of VanDoren Industries, West Lafayette, Ind.
